The era of agentic AI is upon us, promising a new wave of automation in which AI systems independently reason, plan, and execute complex multi-step tasks. These agents have the potential to change how businesses operate, from customer service to internal process automation. Harnessing that power, however, requires a solid grasp of the underlying architecture. Two critical pillars of a robust and trustworthy agentic AI system are the modular use of Large Language Models (LLMs) and a comprehensive observability dashboard. Neglecting either one can lead to inefficient, opaque, and unreliable systems.

This complexity also sharpens the classic “build vs. buy” decision, revealing why even well-resourced organizations might find building such systems in-house an overwhelming, full-time endeavor.

The LLM Orchestra: Why LLM Modularity is Key

In the realm of agentic AI, it’s tempting to seek a single, all-powerful LLM to drive every function. However, the reality is that different tasks within an agentic workflow have different requirements. A one-size-fits-all approach is rarely optimal.

- Specialized Tasks, Specialized Models: An agentic system typically involves multiple stages: breaking down requests into sub-tasks, planning execution steps, interacting with various tools and APIs, retrieving information, and generating responses. Each of these stages can benefit from an LLM optimized for that specific function. For instance:

  - A powerful reasoning LLM is crucial for complex planning and calling the right agents.

  - An LLM fine-tuned on specific enterprise data could handle knowledge retrieval tasks.

  - A separate model might be used for generating creative or empathetic human-like responses.

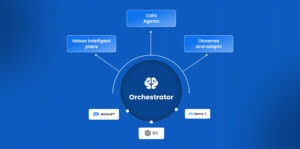

- The Leena AI agentic architecture, for example, reflects this in its Orchestrator, which draws on multiple LLMs: Leena AI’s proprietary WorkLM (fine-tuned on enterprise data), Gemini for multimodal capabilities, Claude for high-complexity reasoning, and OpenAI models where low latency is paramount. This allows the Orchestrator to route each task to the best-suited Agent (e.g., an IT Helpdesk Agent or an Accounts Payable Agent), each potentially powered by models fine-tuned for its specific domain.

- Benefits of LLM Modularity:

  - Optimized Performance & Accuracy: Using the right LLM for the right job leads to better quality outputs and more reliable task completion.

  - Cost-Effectiveness: Not all tasks require the largest, most expensive models. A modular approach allows for the use of smaller, more cost-efficient LLMs where appropriate.

  - Flexibility and Future-Proofing: The LLM landscape is evolving at breakneck speed. A modular architecture allows individual LLM components to be upgraded, replaced, or fine-tuned without needing to overhaul the entire system.

  - Improved Maintainability: Isolating LLM functionalities makes debugging and updates more manageable.
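To make the modularity idea concrete, here is a minimal sketch of a task-to-model router. It is an illustration under stated assumptions, not a description of any vendor's Orchestrator: `ModelSpec`, `ModelRouter`, the model labels, and the relative cost numbers are all hypothetical, and the "models" are offline stubs standing in for real API clients.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A "model" is modeled as a callable from prompt to text. Real clients
# (hosted APIs, fine-tuned in-house models) would be wrapped to fit it.
ModelFn = Callable[[str], str]


@dataclass(frozen=True)
class ModelSpec:
    name: str             # hypothetical label, not a real model identifier
    call: ModelFn
    cost_per_call: float  # illustrative relative cost, not real pricing


class ModelRouter:
    """Maps a task type to a model; each route can be swapped on its own."""

    def __init__(self, default: ModelSpec) -> None:
        self._default = default
        self._routes: Dict[str, ModelSpec] = {}

    def register(self, task_type: str, spec: ModelSpec) -> None:
        # Re-registering a task type replaces only that route.
        self._routes[task_type] = spec

    def resolve(self, task_type: str) -> ModelSpec:
        # Unknown task types fall back to the (cheaper) default model.
        return self._routes.get(task_type, self._default)

    def run(self, task_type: str, prompt: str) -> str:
        return self.resolve(task_type).call(prompt)


def make_stub(label: str) -> ModelFn:
    # Stand-in for a real model call so the sketch runs offline.
    return lambda prompt: f"[{label}] {prompt}"


router = ModelRouter(ModelSpec("small-general", make_stub("small-general"), 1.0))
router.register("planning", ModelSpec("large-reasoning", make_stub("large-reasoning"), 20.0))
router.register("retrieval", ModelSpec("enterprise-tuned", make_stub("enterprise-tuned"), 3.0))

print(router.run("planning", "Break the request into steps"))
print(router.run("small_talk", "Say hello"))  # no route registered: default model

# Upgrading one component leaves every other route untouched.
router.register("planning", ModelSpec("large-reasoning-v2", make_stub("large-reasoning-v2"), 18.0))
```

Because each route is a separate registry entry, replacing the planning model is a one-line change, which is the maintainability and future-proofing benefit listed above in miniature.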

Peering into the Agent’s Mind: The Indispensable Transparency Dashboard

As agentic AI systems operate with increasing autonomy, understanding why they perform certain actions becomes paramount. Without transparency, these systems can become “black boxes,” making it incredibly difficult to troubleshoot issues, verify decisions, build trust, and ensure accountability. This is where a robust debugging and transparency dashboard is critical.

Such a dashboard should provide clear insights into the agent’s operations, including:

- Action Logging & Behavior Tracing: A detailed, step-by-step record of every action the agent takes, the inputs it receives, the tools it utilizes, and the decisions it makes at each juncture.

- Decision Rationale: Where possible, an explanation of the reasoning behind critical decisions, such as why a particular plan was chosen or which data source was prioritized.

- Performance Metrics: Data on task completion rates, processing times, error rates, and resource utilization to identify bottlenecks or areas for improvement. Leena AI’s Analytics component, for instance, monitors system performance, user interactions, and agent accuracy, providing crucial data points for such a dashboard.

- Error Identification and Diagnostics: Tools to quickly pinpoint where and why an error occurred, facilitating rapid debugging and correction.

- Compliance and Audit Trails: A verifiable record of agent activities is essential for regulatory compliance and internal governance, especially when agents interact with sensitive data or critical systems.
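The list above can be sketched as a small trace recorder that a dashboard might read from. This is a minimal illustration, not a production design: `StepRecord`, `AgentTrace`, the tool names, and the metric names are hypothetical, and a real system would persist records rather than hold them in memory.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class StepRecord:
    task_id: str
    action: str
    tool: Optional[str]
    inputs: Dict[str, Any]
    rationale: str            # why the agent chose this step
    duration_s: float
    error: Optional[str] = None


@dataclass
class AgentTrace:
    records: List[StepRecord] = field(default_factory=list)

    def log_step(self, task_id: str, action: str, inputs: Dict[str, Any],
                 rationale: str, fn: Callable[[], Any],
                 tool: Optional[str] = None) -> Any:
        """Run fn(), time it, and record the step even if fn() raises.

        On failure the error is stored on the record and None is returned,
        so one bad step does not erase the trace of what led up to it.
        """
        start = time.perf_counter()
        result, error = None, None
        try:
            result = fn()
        except Exception as exc:
            error = f"{type(exc).__name__}: {exc}"
        elapsed = time.perf_counter() - start
        self.records.append(
            StepRecord(task_id, action, tool, inputs, rationale, elapsed, error)
        )
        return result

    def metrics(self) -> Dict[str, float]:
        """Aggregate the per-step records into dashboard-style numbers."""
        total = len(self.records)
        if total == 0:
            return {"steps": 0.0, "error_rate": 0.0, "mean_duration_s": 0.0}
        failed = sum(1 for r in self.records if r.error is not None)
        mean = sum(r.duration_s for r in self.records) / total
        return {"steps": float(total), "error_rate": failed / total,
                "mean_duration_s": mean}


trace = AgentTrace()
trace.log_step("task-1", "lookup_ticket", {"ticket": "INC-1"},
               "user asked for ticket status", lambda: "open",
               tool="helpdesk_api")
trace.log_step("task-1", "reset_password", {"user": "alice"},
               "ticket is a lockout request", lambda: 1 / 0,  # simulated failure
               tool="identity_api")

for record in trace.records:
    print(record.action, "->", record.error or "ok")
print(trace.metrics())
```

Storing the rationale next to the inputs and the error is the design choice that matters here: it lets someone reading the trace see not only which step failed but why the agent attempted it.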

A lack of such observability not only hinders debugging but also erodes user trust and makes it challenging to ensure the agent is operating reliably and ethically.
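One way to make an audit trail verifiable, offered here as an assumption rather than something the article prescribes, is a hash chain: each entry stores a SHA-256 digest of its own event plus the previous entry's digest, so editing any past entry breaks every digest after it. The `AuditLog` class and the event fields below are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys, fixed separators) keeps the hash stable.
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, event: Dict[str, Any]) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"event": event, "prev": prev, "hash": _digest(prev, event)}
        )

    def verify(self) -> bool:
        # Recompute every digest from the start; any edit breaks the chain.
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != _digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"agent": "it_helpdesk", "action": "reset_password", "user": "alice"})
log.append({"agent": "accounts_payable", "action": "approve_invoice", "id": 42})
print(log.verify())  # True

# Simulate someone rewriting history after the fact.
log.entries[0]["event"]["action"] = "delete_account"
print(log.verify())  # False
```

A chain like this only detects tampering. It does not prevent it, and an attacker who can rewrite the whole log can rebuild the chain, so the latest digest would still need to be anchored somewhere the agent platform cannot modify.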

The Ever-Evolving AI Frontier: A Reality Check

The rapid pace of AI development means that even state-of-the-art models are continuously being refined, and “upgrades” don’t always translate to universal improvements. As a stark example, a TechCrunch report dated April 18, 2025, ran under the headline “OpenAI’s new reasoning AI models hallucinate more.” The takeaway is that even next-generation models designed for enhanced reasoning can exhibit higher rates of confabulation in certain contexts, which underscores the experimental phase that even industry leaders are still navigating. This isn’t necessarily a step backward but rather a reminder of the “two steps forward, one step sideways” nature of current AI development.

This volatile environment significantly complicates in-house development. Teams would need to constantly evaluate new models, re-validate their systems, and adapt to unexpected behavioral shifts, which is a resource-intensive and highly specialized undertaking.
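The re-validation work described above can be sketched as a small regression gate that a team might run before swapping in a new model. Everything here is an assumption for illustration: the `EvalCase` structure, the substring check, the two stub models, and the sample questions and answers are invented, and a real evaluation suite would be far larger and use stronger scoring than substring matching.

```python
from dataclasses import dataclass
from typing import Callable, List

ModelFn = Callable[[str], str]


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    must_contain: str  # a fact the answer has to include to count as a pass


def pass_rate(model: ModelFn, cases: List[EvalCase]) -> float:
    """Fraction of cases whose answer contains the expected fact.

    Substring matching is deliberately crude; it stands in for whatever
    grading method a real evaluation suite would use.
    """
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(
        1 for c in cases if c.must_contain.lower() in model(c.prompt).lower()
    )
    return passed / len(cases)


def safe_to_promote(current: ModelFn, candidate: ModelFn,
                    cases: List[EvalCase], max_regression: float = 0.0) -> bool:
    # Block the swap if the candidate scores worse than the current model
    # by more than the tolerated margin.
    return pass_rate(candidate, cases) >= pass_rate(current, cases) - max_regression


# Invented golden cases and stub models, for demonstration only.
CASES = [
    EvalCase("How many vacation days do new hires get?", "20"),
    EvalCase("Which tool handles expense claims?", "ExpenseHub"),
]


def current_model(prompt: str) -> str:
    answers = {
        "How many vacation days do new hires get?": "New hires get 20 vacation days.",
        "Which tool handles expense claims?": "Expense claims go through ExpenseHub.",
    }
    return answers.get(prompt, "I don't know.")


def candidate_model(prompt: str) -> str:
    if "vacation" in prompt:
        return "New hires get 30 vacation days."  # simulated hallucination
    return current_model(prompt)


print(pass_rate(current_model, CASES))
print(pass_rate(candidate_model, CASES))
print(safe_to_promote(current_model, candidate_model, CASES))
```

Running a gate like this on every model change is what turns "newer" into "verified better for our tasks", and maintaining the golden cases is part of the ongoing cost the article attributes to in-house builds.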

The “Build vs. Buy” Dilemma in the Age of Agentic AI

Building a sophisticated agentic AI system from the ground up, incorporating LLM modularity and comprehensive transparency tools, is a monumental task. It’s not just about writing code; it’s about architecting, integrating, maintaining, and continuously evolving a complex ecosystem of technologies and models.

Challenges of Internal Builds:

- Expertise Acquisition and Retention: Accessing and retaining talent with expertise in diverse LLMs, agentic frameworks, MLOps, and data engineering is a significant hurdle.

- Integration Complexity: Selecting, integrating, and orchestrating multiple LLMs, APIs, and data sources (like those managed by Leena AI’s Knowledge Management component), and building integrations with enterprise apps such as Salesforce, ServiceNow, and Workday, requires substantial effort.

- Development of Observability Tools: Creating a truly insightful debugging and transparency dashboard is a product development effort in itself.

- High Upfront and Ongoing Costs: The investment in infrastructure, talent, model licensing/fine-tuning, and continuous maintenance can be prohibitive.

- Keeping Pace with Innovation: The AI field’s rapid evolution means in-house solutions can quickly become outdated or require constant, intensive redevelopment. This isn’t a side project; it’s a full-time commitment for a dedicated, expert team.

Considering these challenges, the argument for “buy, don’t build” becomes compelling for many organizations. Specialized vendors offering agentic AI platforms have dedicated teams whose sole focus is to tackle these complexities. They invest heavily in R&D, continuously update their platforms with the latest LLMs and best practices, and provide robust, battle-tested components for modularity, orchestration, and transparency.

Conclusion: Strategically Navigating the Agentic AI Landscape

Agentic AI holds immense promise, but its successful implementation hinges on a carefully considered architectural foundation. Modular LLM deployment ensures optimal performance and adaptability, while comprehensive observability dashboards are vital for trust, debuggability, and accountability.

Given the profound technical depth required to design, build, and maintain these systems effectively, especially in a rapidly shifting technological landscape where even leading models present evolving challenges, organizations must critically assess their internal capabilities. For many, attempting an in-house build means diverting significant resources and focus from their core business, with a high risk of falling short.

Partnering with a specialized agentic AI solution provider, who lives and breathes these complexities, allows organizations to leverage cutting-edge AI capabilities more quickly, reliably, and often more cost-effectively. In the intricate world of agentic AI, “buy, don’t build” is frequently the most strategic path to unlocking true business value.