Introduction

In today’s multi‑agent reality, most enterprises already run a constellation of AI assistants: HR agents, IT help‑desks, finance agents, and increasingly, bespoke agents built by individual teams.

Yet these agents often operate in silos—great at their tasks, but blind to the broader context. As a result, employees juggle multiple chat windows, APIs remain underutilized, and IT teams burn cycles stitching point‑to‑point integrations.

Leena AI’s new Agent‑to‑Agent (A2A) Interoperability changes that. By providing every enterprise agent with a common language and a secure handshake, A2A enables them to communicate—and, more importantly, collaborate—so your business can function as a single, cohesive system.

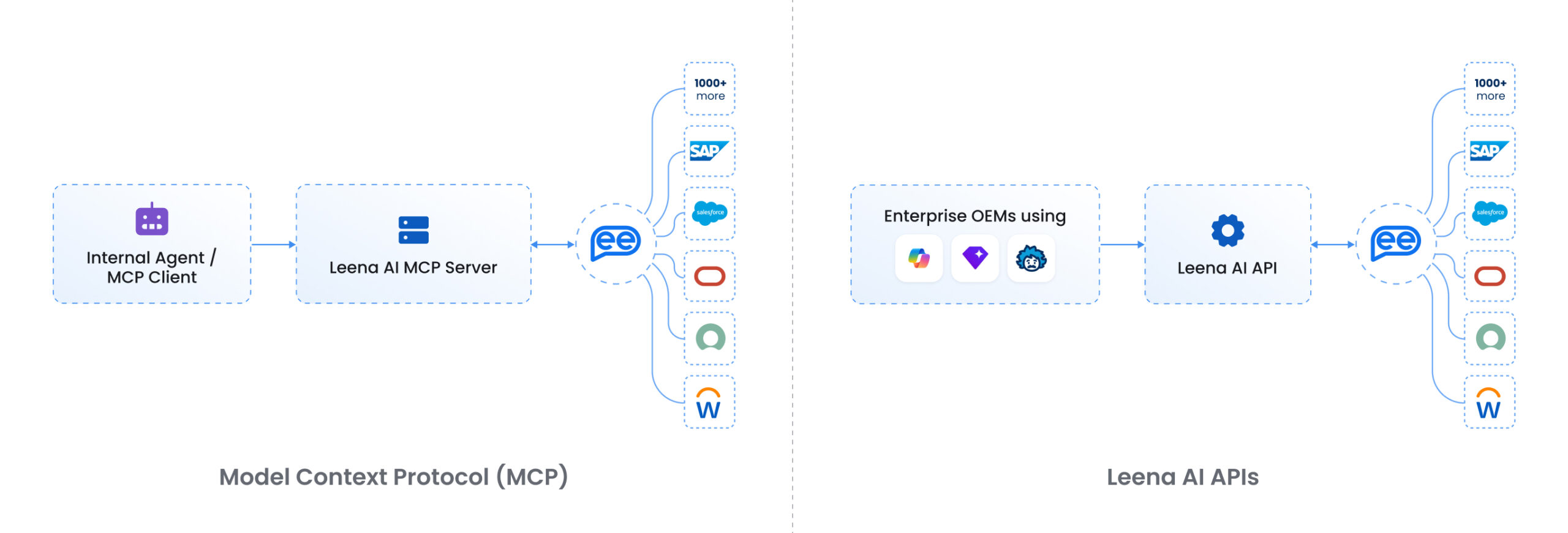

A2A vs. MCP—Complementary, Not Competing

| | Model Context Protocol (MCP) | Agent‑to‑Agent (A2A) |
| --- | --- | --- |
| Integration Style | Vertical (one agent ↔ many tools) | Horizontal (agent ↔ agent) |
| Primary Goal | Let a single model retrieve & act on data from external tools | Allow multiple agents to delegate, coordinate, and share context |
| Analogy | USB‑C port for tools & data sources | Inter‑app messaging bus for agents |
| Coverage in Leena AI | 1,000+ tool connectors out of the box | Cross‑agent orchestration layer |

MCP gives each agent superpowers; A2A makes those superpowers collaborative.

How Leena AI Makes A2A Work

- Standards‑First Plumbing
  - Model Context Protocol (MCP) for secure, two‑way data exchange.
  - RESTful API fallback for platforms that haven’t adopted MCP yet.
- Google‑Inspired A2A Framework
  - Structured agent envelopes, role‑based permissions, and conflict‑resolution heuristics.
- 1,000+ Pre‑Built Connectors
  - HRIS, ITSM, ERP, CRM, and knowledge bases are ready on day one.
- Zero‑Trust Security Layer
  - OAuth 2.0 / SAML, fine‑grained scopes, immutable audit logs.
- Transparency Dashboard
  - Real‑time visibility into every inter‑agent conversation, with explainability baked in.

Why Enterprises Care (and CFOs Cheer)

- Integrations in Hours, Not Months: Drag‑and‑drop 1,000+ connectors; let agents auto‑negotiate payloads.

- Unified Employee Experience: One chat interface, limitless back‑end reach. Fewer portals, happier users.

- Self‑Service + Transactional Muscle: Reset a password and approve PTO in a single thread.

- Security You Can Sleep On: Zero‑trust auth, policy‑based controls, and traceable interactions.

- Future‑Proof Architecture: As new agents emerge, they inherit the same lingua franca—no rewrite required.

Why Is MCP More Relevant at the “Developer Level” Than at the “Enterprise Architect Level”?

The Model Context Protocol is attractive to developers because it streamlines how AI components talk to each other, much as a universal adapter simplifies connecting devices. Its impact on your overall technology architecture is a separate question. At the enterprise level, where systems identify and authorize each other through established methods such as OAuth, adopting the protocol can introduce unforeseen complexity. Think of it as a new, specialized connector that is efficient for specific tasks but doesn’t easily fit the standardized power outlets already installed across your enterprise.

Our aim is always to build robust, secure, and seamlessly integrated systems. MCP is a valuable tool in a developer’s toolkit for specific integrations, but its current interaction with security frameworks like OAuth suggests that it may not yet be the most straightforward basis for an enterprise‑wide strategy. We’re watching its evolution closely: any new protocol should simplify, rather than complicate, your architectural landscape, so that every part of your technology ecosystem works together smoothly and securely.

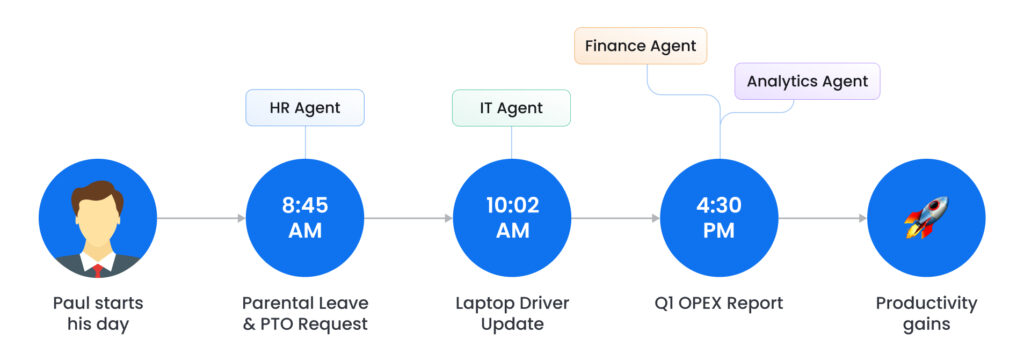

A Day in the Life: Paul’s Friction‑Free Friday

8:45 AM — Paul asks, “Is our new parental leave policy paid or unpaid? If unpaid, can you use my remaining PTO?” The HR‑Agent surfaces the policy from SharePoint and schedules PTO in Workday.

10:02 AM — His laptop needs a graphics driver. The IT-Agent communicates with the Device-Agent, triggers Jamf to install it, and updates the ticket in the same chat.

4:30 PM — Paul requests Q1 OPEX numbers. Finance‑Agent fetches the report, while Analytics‑Agent highlights a 6 % cost reduction trend.

Throughout the day, each agent shares context, so Paul never repeats himself and never leaves the chat.

Productivity gains? Priceless.

The Road Ahead

Agentic AI is moving from isolated skills to networked intelligence. With A2A, Leena AI lays the foundation for:

- Autonomous workflows that span departments without human middleware.

- Composable AI marketplaces where best‑of‑breed agents snap together like Lego.

- Explainable, auditable decision chains—essential for trust and compliance.

If your enterprise vision includes fewer silos, faster innovation, and happier employees, A2A isn’t just nice to have—it’s inevitable.

Ready to see it in action? Book a demo and watch your agents finally work as a team.

___________________________________________________________________

1. What is MCP?

MCP, or Model Context Protocol, is an open-source standard designed to provide a universal way for AI models, particularly Large Language Models (LLMs), to connect with and utilize external data sources and tools. Think of it as a standardized interface that allows AI to securely access and interact with diverse information repositories like databases, APIs, local files, and SaaS applications. This enables AI to provide more relevant, context-aware, and accurate responses by drawing on information beyond its training data.

2. Why is MCP important?

MCP addresses several key challenges in making AI agents more powerful and practical:

- Standardization: It offers a consistent method for AI to interact with various resources, eliminating the need for custom integrations for each new data source or tool.

- Enhanced Capabilities: By accessing external context, AI agents can perform more complex tasks, answer questions with up-to-date information, and take actions in other systems.

- Developer Efficiency: Developers can build integrations once and have them work across different LLMs and applications that support MCP. This fosters a reusable ecosystem of “MCP servers.”

- Security: MCP aims to provide best practices for securing data within an organization’s infrastructure when accessed by AI models.

- Flexibility: It allows for easier switching between different LLM providers and vendors.

3. How does MCP work?

MCP typically follows a client-server architecture:

- MCP Host: An application (like an AI-powered chat interface or development environment) that facilitates communication.

- MCP Client: Resides within the host and makes requests to MCP servers.

- MCP Server: A lightweight program that exposes specific data sources or tools (e.g., a local file system, a GitHub repository, a database) through the standardized Model Context Protocol. It translates requests from the MCP client into actions on the resource and returns the results.

When a user interacts with an AI in an MCP-enabled host, the AI can discover available MCP servers and, with appropriate permissions, query them for relevant information or trigger actions.
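The host, client, and server roles described above can be sketched as a toy, in-process model. This is only an illustration of the division of responsibilities, not the MCP wire protocol or any official SDK: the class names (`ToyServer`, `ToyClient`, `ToyHost`), the `allowed_tools` permission set, and the sample file contents are all assumptions made up for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server: exposes named tools over one resource."""
    name: str
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, **arguments: Any) -> Any:
        if tool not in self.tools:
            raise KeyError(f"{self.name} has no tool named {tool!r}")
        return self.tools[tool](**arguments)


@dataclass
class ToyClient:
    """Lives inside the host and forwards requests to exactly one server."""
    server: ToyServer
    allowed_tools: Set[str]  # assumed permission model, for illustration only

    def discover(self) -> List[str]:
        # Advertise only the tools this client has been granted.
        return [t for t in self.server.list_tools() if t in self.allowed_tools]

    def request(self, tool: str, **arguments: Any) -> Any:
        if tool not in self.allowed_tools:
            raise PermissionError(f"tool {tool!r} is not permitted")
        return self.server.call_tool(tool, **arguments)


class ToyHost:
    """The application the user talks to; it owns one client per connected server."""

    def __init__(self) -> None:
        self.clients: Dict[str, ToyClient] = {}

    def connect(self, server: ToyServer, allowed_tools: Set[str]) -> None:
        self.clients[server.name] = ToyClient(server, allowed_tools)

    def available_tools(self) -> Dict[str, List[str]]:
        return {name: client.discover() for name, client in self.clients.items()}

    def invoke(self, server_name: str, tool: str, **arguments: Any) -> Any:
        return self.clients[server_name].request(tool, **arguments)


# Made-up sample resource standing in for a file system.
FILES = {"policy.txt": "sample policy text"}

files_server = ToyServer(
    name="files",
    tools={
        "read_file": lambda path: FILES[path],
        "delete_file": lambda path: FILES.pop(path),
    },
)

host = ToyHost()
host.connect(files_server, allowed_tools={"read_file"})
```

Here `host.available_tools()` reports only `read_file` for the `files` server, and a call to `delete_file` is rejected with `PermissionError` before it ever reaches the server, which mirrors the "with appropriate permissions" condition in the text.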

4. What is A2A?

A2A, or Agent-to-Agent Protocol, is an open protocol designed to enable different AI agents to communicate, collaborate, and coordinate actions with each other, regardless of the underlying framework or vendor they were built with. Its primary goal is to foster interoperability in a multi-agent AI ecosystem.

5. Why is A2A important?

As AI agents become more specialized, the ability for them to work together becomes crucial:

- Interoperability: A2A allows agents developed by different teams or companies, using diverse technologies, to understand each other and collaborate on tasks.

- Enhanced Problem Solving: Complex problems can be broken down and tackled by multiple specialized agents working in concert.

- Flexibility and Modularity: Users can combine agents from various providers to create more powerful and customized solutions.

- Support for Complex Workflows: A2A is designed to support both quick tasks and long-running processes that may involve human-in-the-loop interaction.

6. How does A2A work?

A2A facilitates communication between a “client” agent (initiating a task) and a “remote” agent (performing the task). Key concepts include:

- Agent Card: A digital “business card” for an agent, advertising its capabilities in a standardized format (e.g., JSON). This allows for the discovery of suitable agents.

- Task-Oriented Architecture: Interactions are centered around “Tasks,” which represent a request from one agent to another.

- Standardized Communication: The protocol builds on existing web standards such as HTTP, SSE (Server-Sent Events), and JSON-RPC, making it easier to integrate with existing IT infrastructure.

- Modality Agnostic: While text is common, A2A aims to support various communication modalities, potentially including audio and video.
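The Agent Card and Task concepts above can be made concrete with a small sketch. The card fields (`name`, `url`, `skills`), the example URLs, and the task states used here are simplified assumptions chosen for illustration; the actual A2A specification defines a richer schema and lifecycle, and the handler is invoked locally rather than over HTTP.

```python
import json
import uuid
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical agent cards; a real card carries more metadata than this.
AGENT_CARDS_JSON = """
[
  {"name": "hr-agent", "url": "https://agents.example.com/hr",
   "skills": ["leave-policy", "pto-booking"]},
  {"name": "it-agent", "url": "https://agents.example.com/it",
   "skills": ["driver-install", "password-reset"]}
]
"""


@dataclass
class AgentCard:
    name: str
    url: str
    skills: List[str]


@dataclass
class Task:
    skill: str
    payload: dict
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    state: str = "submitted"  # illustrative states: submitted, working, completed, failed
    result: Optional[dict] = None


def load_cards(raw: str) -> List[AgentCard]:
    """Parse a JSON list of cards into AgentCard objects."""
    return [AgentCard(**entry) for entry in json.loads(raw)]


def find_agent(cards: List[AgentCard], skill: str) -> Optional[AgentCard]:
    """Discovery step: return the first agent that advertises the skill."""
    for card in cards:
        if skill in card.skills:
            return card
    return None


def run_task(task: Task, handler: Callable[[dict], dict]) -> Task:
    """Remote-agent side: move the task through its states while handling it."""
    task.state = "working"
    try:
        task.result = handler(task.payload)
        task.state = "completed"
    except Exception as exc:
        task.result = {"error": str(exc)}
        task.state = "failed"
    return task
```

A client agent would first call `find_agent(load_cards(AGENT_CARDS_JSON), "driver-install")` to pick a remote agent, then send it a `Task`; in this sketch `run_task` plays the remote agent's part.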

7. How does A2A relate to MCP?

A2A and MCP are generally considered complementary rather than competing technologies:

- MCP focuses on providing individual AI agents with access to external data and tools (connecting agents to resources).

- A2A focuses on enabling different AI agents to communicate and collaborate (connecting agents to other agents).

An agent might use MCP to gather information from a database and then use A2A to share that information or delegate a subsequent action to another specialized agent.
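As a rough sketch of that two-step pattern, the example below uses plain Python functions as stand-ins: `query_pto_balance` plays the role of a tool reached through MCP, and `scheduling_agent` plays the role of a remote agent reached through A2A. All names, the employee IDs, and the balances are hypothetical, and no real protocol traffic is involved.

```python
from typing import Callable, Dict


def query_pto_balance(employee_id: str) -> Dict[str, int]:
    """Stand-in for a database lookup exposed as a tool (made-up data)."""
    balances = {"e-100": 12, "e-200": 0}
    return {"days_remaining": balances.get(employee_id, 0)}


def scheduling_agent(task: Dict) -> Dict:
    """Stand-in for a specialized remote agent that books time off."""
    days = min(task["days_requested"], task["days_remaining"])
    return {"status": "booked" if days > 0 else "rejected", "days_booked": days}


def hr_agent(
    employee_id: str,
    days_requested: int,
    tool: Callable[[str], Dict[str, int]] = query_pto_balance,
    delegate: Callable[[Dict], Dict] = scheduling_agent,
) -> Dict:
    # Vertical step (agent to resource): gather context from a tool.
    context = tool(employee_id)
    # Horizontal step (agent to agent): hand the enriched task to a peer.
    task = {"employee_id": employee_id, "days_requested": days_requested, **context}
    return delegate(task)
```

Passing the tool and the delegate in as parameters keeps the two kinds of integration separate, so either side could be swapped for a real connector without touching the other.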