Introduction

Imagine if your AI assistant could plug into all your favorite apps and data sources as easily as connecting a USB-C device. That’s the promise of Anthropic’s Model Context Protocol (MCP) – a new open standard that’s causing a buzz in the AI world. In this post, we’ll explore what MCP is all about, some real-world uses people are finding for it, how it can benefit big enterprises, where it falls short, and how it stacks up against OpenAI’s Work with Apps feature. Whether you’re a developer, tech enthusiast, or business leader, read on to understand why MCP is being called the “USB-C for AI” and what that means for the future of AI integrations.

What is MCP? (Core Functionality and Purpose)

At its core, MCP (Model Context Protocol) is an open standard for connecting AI models to external data sources and tools. Think of MCP as a “USB-C port” for AI applications: a standardized plug-and-play interface that lets AI assistants access external information from your traditional applications or databases. Just as USB-C standardizes how we connect devices, MCP standardizes how large language models (LLMs) connect to different databases, apps, and services.

Why was MCP created?

The big idea is to break AI models out of their “information silos.” Today’s best models (like Claude or GPT-4) are incredibly smart, but by default, they’re isolated from your data: they only know what’s in their training data or what you paste into the prompt.

Every new connection (to, say, a database or an application) typically requires a custom integration or plugin. This doesn’t scale well; developers end up writing different connectors for different models and tools. MCP tackles this by providing one universal protocol for all such integrations.

As Anthropic describes it, MCP aims to replace fragmented one-off integrations with a “simpler, more reliable” standard so AI systems can easily get the data they need.

How does it work?

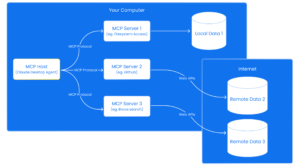

MCP uses a straightforward client-server architecture. On one side, you have AI-powered applications (clients) like the Claude Desktop app or even IDEs that want to fetch external data. On the other side, you have MCP servers – lightweight connectors that know how to talk to a specific data source or service (for example, a server that connects to Slack, or one that connects to a Postgres database). The AI app (client) can connect to many such servers. When you ask the AI a question, the client can query these servers to retrieve context (data, documents, etc.) or perform actions on local or remote applications, and feed that into the model’s prompt. Crucially, this communication is two-way – not only can the AI fetch information, but it can also trigger actions via the server (like posting a message or writing to a file).

All of this happens through standardized message types (MCP defines primitives like “Prompts,” “Resources,” and “Tools” on the server side, and “Roots” and “Sampling” on the client side to handle requests).

In plain terms, MCP gives the model a structured way to ask, “Hey, get me this piece of data” or “Please execute this function for me,” and to receive the result back.
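
To make the request-and-result exchange concrete, here is a minimal sketch in Python. MCP messages are framed as JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification; everything else here (the `search_docs` tool, the `DOCS` data, and the `handle_request` and `call_tool` helpers) is a hypothetical stand-in, and the message shapes are simplified. It runs in a single process with no real transport, so treat it as an illustration rather than an actual MCP server.

```python
import json

# Hypothetical in-memory data standing in for a real data source.
DOCS = {"vacation-policy": "Employees accrue 1.5 days per month."}


def search_docs(arguments: dict) -> str:
    """Illustrative tool: look up a document by key."""
    return DOCS.get(arguments["key"], "not found")


# Tool registry, loosely mirroring the server-side "Tools" primitive.
TOOLS = {"search_docs": search_docs}


def handle_request(raw: str) -> str:
    """Server side: dispatch one JSON-RPC 2.0 request, return the response."""
    request = json.loads(raw)
    method, request_id = request["method"], request["id"]
    if method == "tools/list":
        result = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        params = request["params"]
        text = TOOLS[params["name"]](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request_id, "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


def call_tool(name: str, arguments: dict, request_id: int = 1) -> dict:
    """Client side: build a tools/call request and parse the reply."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.loads(handle_request(json.dumps(request)))


if __name__ == "__main__":
    print(call_tool("search_docs", {"key": "vacation-policy"}))
```

In a real deployment the client and server would be separate processes exchanging these messages over a transport, and the tool listing would also carry descriptions and input schemas so the model knows how to call each tool.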

Security and control are built into the design: the AI host (client) controls what servers it connects to and the servers control what clients are allowed to do.

This means an organization or user can strictly limit an AI’s access. You might allow it to read from a specific database but not write to it, for example. Because MCP is an open protocol, anyone can implement it. Anthropic open-sourced the MCP spec and SDKs (in Python, TypeScript, Java, etc.), so developers can build their own servers or clients.
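
One way to picture the "read but not write" restriction is a host-side allowlist that is checked before any tool call leaves the client. The sketch below is an assumption about how such a guard could look, not part of the MCP spec or SDKs: the server names, tool names, `ALLOWED_TOOLS`, `is_allowed`, and `guarded_call` are all hypothetical.

```python
# Hypothetical host-side policy: server name -> tool names the model may call.
ALLOWED_TOOLS = {
    "postgres": {"run_select"},  # read-only: no write tool is listed
    "filesystem": {"read_file", "write_file"},
}


def is_allowed(server: str, tool: str) -> bool:
    """Return True only if the tool is explicitly allowed for that server."""
    return tool in ALLOWED_TOOLS.get(server, set())


def guarded_call(server: str, tool: str, arguments: dict, send):
    """Check the policy, then forward the call via `send` (a hypothetical
    callable that would deliver the request to the MCP server)."""
    if not is_allowed(server, tool):
        raise PermissionError(f"{tool!r} on {server!r} is not permitted")
    return send(server, tool, arguments)


if __name__ == "__main__":
    # Stand-in transport that just echoes what it was asked to do.
    echo = lambda server, tool, arguments: (server, tool, arguments)
    print(guarded_call("postgres", "run_select", {"sql": "SELECT 1"}, echo))
```

Denying by default (anything not listed is refused) keeps a newly added server or tool from becoming reachable by accident.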

Anthropic’s goal, as stated by their dev relations head, is “to build a world where AI connects to any data source,” with MCP acting as a “universal translator” between AI and your data.

What are some use cases of MCP?

Programming and Code Assistance: “AI, what does this function do?” – Developers can connect Claude (or another MCP-enabled AI) to their codebase, IDE, and documentation. Access to the documentation lets the AI give on-the-fly explanations or suggestions with the full context of the project’s code. This means you could have an AI pair programmer that knows about all your project files and version control history, and can even perform actions like running tests or committing code via connected tools.

Enterprise Chatbots with Unified Data Access: One of the most touted uses of MCP is the enterprise assistant that isn’t siloed. MCP can enable a chatbot to search across sales/CRM data, Slack messages, Google Drive documents, and other sources at the same time.

In summary, MCP turns AI into an agent that can reach out and interact with the world (digital world, at least). From coding to corporate knowledge to personal productivity, the coolness factor is in having one AI interface that can pull in the right context at the right time. It’s why many in the AI community are excited – they see MCP as enabling a new generation of AI assistants that are far more useful because they’re not working blind.

Shortcomings and Limitations of MCP

With all the excitement around MCP, it’s important to also recognize its limitations and challenges. As with any new technology, it’s not a silver bullet, and early users have identified a few areas where MCP isn’t perfect:

- Limited to Claude (for Now): As of its launch, MCP is primarily geared towards Anthropic’s Claude models. Anthropic open-sourced the protocol hoping others will adopt it, but “MCP is a standard only for the Claude family of models right now”.

- Setup Complexity (Early UX Pain Points): Early adopters have noted that using MCP in its current form can be a bit technical and clunky. You often need to run multiple MCP server processes (one for each tool/data source) on your machine or server, and then connect them to the AI client. One commentator jokingly described the current state as “having to run a million servers in my terminal locally and only being compatible with desktop apps”. For a non-developer or average user, that’s a barrier.

- Tool Reliability and AI Behavior: Just because you connect a tool doesn’t mean the AI will use it correctly. One of the fundamental issues with AI agents using tools is reliability. Current AI models sometimes choose the wrong tool, use it improperly, or fail to use it when they should. In fact, internal tests show that “current models fail to call the right tool about half the time” even in well-designed agent setups. MCP doesn’t magically fix that. It provides the interface, but the AI still needs good prompting and sufficient intelligence to decide when and how to use the available tools.

- Principal Propagation for user identity: Principal propagation refers to the process of transmitting a user’s authenticated identity across multiple system components. This mechanism ensures that user-specific permissions and data access rights are consistently enforced throughout interconnected systems. In scenarios where principal propagation is absent, systems may struggle to maintain coherent security policies and deliver tailored user experiences. By default, MCP doesn’t propagate user identity to the tool calls. The absence of user identity forwarding can complicate the enforcement of fine-grained access controls, potentially leading to unauthorized data access.

- Business Process Grounding: MCP servers are designed to provide standardized access to data and tools. However, these servers may not inherently understand or integrate with the unique business processes of an organization. For instance, suppose that onboarding a new sales employee requires creating their account in Active Directory (AD) and adding them to five different security groups. An MCP server might offer one generic function to create a user in AD and another to add a user to a security group, but the server itself has no way to tie the two together into a single onboarding process.
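
The last two limitations can be illustrated together with a short Python sketch. It assumes a workflow layer that sits above the generic tools, composes them into one onboarding step, and passes the acting user's identity to every call so that permissions are checked per user. None of this is provided by MCP itself: `Principal`, `FakeDirectory`, `SALES_GROUPS`, the permission strings, and `onboard_sales_employee` are all hypothetical names, and the directory is an in-memory stand-in rather than a real AD integration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    """The authenticated user on whose behalf a tool call is made."""
    user_id: str
    permissions: frozenset


def require(actor: Principal, permission: str) -> None:
    """Reject the call unless the acting user holds the permission."""
    if permission not in actor.permissions:
        raise PermissionError(f"{actor.user_id} lacks {permission}")


class FakeDirectory:
    """In-memory stand-in for a directory service (hypothetical)."""

    def __init__(self):
        self.users = {}  # username -> set of group names

    # Two generic, unrelated operations, as a tool server might expose them.
    def create_user(self, actor: Principal, username: str) -> None:
        require(actor, "directory:create_user")
        self.users[username] = set()

    def add_to_group(self, actor: Principal, username: str, group: str) -> None:
        require(actor, "directory:manage_groups")
        self.users[username].add(group)


# Hypothetical security groups for a new sales hire.
SALES_GROUPS = ("sales-all", "crm-users", "sales-vpn", "sales-drive", "sales-reports")


def onboard_sales_employee(directory, actor: Principal, username: str) -> list:
    """Workflow layer: ties the generic operations into one business step,
    forwarding the same principal to each call."""
    directory.create_user(actor, username)
    for group in SALES_GROUPS:
        directory.add_to_group(actor, username, group)
    return sorted(directory.users[username])


if __name__ == "__main__":
    hr_admin = Principal(
        "hr-admin", frozenset({"directory:create_user", "directory:manage_groups"})
    )
    print(onboard_sales_employee(FakeDirectory(), hr_admin, "new.hire"))
```

The design point is that the business knowledge (which groups a sales hire needs, and in what order the steps run) lives in the workflow function, while the identity travels with every call instead of being implied by a shared service account.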

Leena AI’s stance on MCP

The Model Context Protocol (MCP) represents an exciting step towards making AI assistants more deeply integrated into enterprise applications. Protocols like MCP point to a future where your AI assistant can truly act as an agent, pulling information from anywhere and executing tasks for you, all while speaking in natural language. At its best, MCP could revolutionize how AI works across multiple applications, much as REST revolutionized communication between applications, or as SQL revolutionized data management and analysis by providing a standardized method for querying and managing relational databases. But because the success of any protocol is determined by how widely it is adopted, it will be interesting to see how MCP fares outside of the Claude ecosystem. Currently, adoption outside Anthropic’s ecosystem is low.

While the Model Context Protocol offers exciting possibilities for developers by streamlining how different artificial intelligence components talk to each other, much like a universal adapter simplifies connecting various devices, it’s also important to consider its broader impact on your overall technology architecture. When we look at the bigger picture, especially how systems securely identify and authorize each other using established methods like OAuth, integrating this new protocol can introduce unforeseen complexities. Think of it as having a new, very specialized type of connector that, while efficient for specific tasks, doesn’t easily fit into the existing, standardized power outlets across your enterprise.

Our aim is always to build robust, secure, and seamlessly integrated systems. While the Model Context Protocol is a valuable tool in a developer’s toolkit for specific integrations, its current interaction with security frameworks like OAuth suggests that it might not yet be the most straightforward solution for an overarching, enterprise-wide strategy. We’re closely watching its evolution, because any new protocol should simplify, rather than complicate, your architectural landscape, so that all parts of your technology ecosystem work together smoothly and securely.

At Leena AI, we give our Agents the ability to integrate with 1000+ applications. All our integrations are built with security in mind and ensure that each user has the same level of permission they have in the source system. You can read our detailed architecture here.

We have started investing in the MCP ecosystem and are currently testing the efficacy of MCP servers for each of our application integrations. We will expose the MCP servers to our agents as ‘Skills’ to test the accuracy & latency of the MCP architecture.

We will be publishing our learnings by April – keep checking this space.