At its peak, Retrieval-Augmented Generation (RAG) was hailed as a quick fix for giving AI up-to-date, domain-specific knowledge. Threads on X/Twitter like “Build an enterprise RAG pipeline in 7 simple steps!” promised an easy way around the limitations of pre-trained models: access to your own data with minimal fuss. This first encounter often sparks optimism.

In reality, many CIOs discover that naïve RAG implementations fall short of the hype, especially with messy real-world data.

So, is RAG dead? Spoiler: RAG isn’t dead – but it must evolve. Let’s break down why the simplistic approach is misleading and how Agentic RAG is emerging as the real solution for enterprise AI.

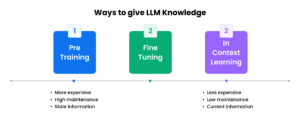

Before we dive in, it’s essential to understand the different ways you can provide knowledge to an LLM — and why RAG stands out as a compelling approach.

RAG enables LLMs to draw on current or proprietary data without extensive re-training. Because enterprise content is updated frequently and keeps growing, a company chatbot or AI assistant built on RAG can reference the latest policies, wikis, and other documents at query time. This yields more relevant answers and scales better than re-training a model every time the content changes. RAG also makes it possible to enforce access control at query time, so each user receives answers based only on the documents their permissions allow them to see.
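Because retrieval happens per query, permissions can be applied before any text reaches the model. The sketch below is a minimal illustration, assuming each chunk carries a hypothetical `allowed_groups` field copied from the source system's ACLs; in a real deployment this check would typically be pushed down into the vector database query as a metadata filter rather than done in Python afterwards.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    # Hypothetical ACL metadata: groups allowed to read the source document.
    allowed_groups: set[str] = field(default_factory=set)


def visible_chunks(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep only chunks the user may read, before anything is placed in the LLM prompt."""
    return [c for c in chunks if c.allowed_groups & user_groups]


corpus = [
    Chunk("Travel policy: economy class for flights under 6 hours.", {"all-staff"}),
    Chunk("Executive compensation bands for 2024.", {"hr", "execs"}),
]

# A regular employee only ever sees the travel policy chunk.
print([c.text for c in visible_chunks(corpus, {"all-staff"})])
```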

Conventional RAG: The “Simple” Pipeline

At its core, a standard RAG pipeline looks straightforward. The system takes a user’s question, fetches relevant documents or snippets from a knowledge source, and enriches the LLM’s prompt with this retrieved content before generating a response. It has two main components:

- Retriever: Typically a combination of an embedding model and a vector database, it transforms the query into a vector and locates similar text chunks from the knowledge base.

- Generator (LLM): The large language model that combines the user query with the retrieved context to produce a coherent and informed answer.
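To make the two components concrete, here is a minimal sketch of one retrieve-then-generate pass. It assumes a toy bag-of-words vector in place of a real embedding model and a plain Python list in place of a vector database, and it stops once the prompt is built, since the generator call depends on whichever LLM API you use. The names `embed`, `retrieve`, and `build_prompt` are illustrative, not taken from any particular library.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Retriever: rank chunks by similarity to the query vector and return the top k."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation: place the retrieved chunks in front of the question for the generator."""
    sources = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return f"Answer using only the sources below.\n\nSources:\n{sources}\n\nQuestion: {query}"


chunks = [
    "Employees accrue 25 vacation days per year.",
    "The VPN must be used on public Wi-Fi.",
    "Unused vacation days expire on March 31.",
]
question = "How many vacation days do employees get?"
prompt = build_prompt(question, retrieve(question, chunks))
print(prompt)  # this string would be sent to the generator LLM
```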

So far, so good – what’s the catch? On paper, a basic RAG pipeline is indeed easy to set up. The problem is what happens when this neat pipeline meets real-world complexity. It’s like building a simple engine in a lab versus getting it to run reliably in a Formula 1 race.

When Reality Hits: RAG’s Challenges

Real enterprise data and queries can quickly overwhelm a simplistic RAG setup. Let’s look at a few of these challenges:

Messy, Complex Data

In theory, your knowledge base is a nice collection of text documents. In reality, enterprise knowledge is all over the place – PDFs with tables and charts, webpages, spreadsheets, database records, and even images. A vanilla RAG pipeline often chokes on this. For example, extracting text from a complex PDF (with a mix of columns, headers, tables) is non-trivial – you need sophisticated parsing to avoid gibberish chunks. Similarly, code snippets or structured data might need special handling. If your pipeline just blindly chunks documents by character count, you risk splitting important context or including irrelevant text (e.g., page headers, legal boilerplate) in the prompt. This noise can confuse the LLM.
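The chunking risk is easy to reproduce. This minimal sketch (the function name is hypothetical) cuts a two-sentence policy every 40 characters: the 30-day rule and its exception land in different chunks, and a word is sliced in half, so a retriever that returns only one chunk hands the LLM half of the policy.

```python
def chunk_by_chars(text: str, size: int) -> list[str]:
    """Naive fixed-size chunking: cut every `size` characters, ignoring sentence boundaries."""
    return [text[i:i + size] for i in range(0, len(text), size)]


doc = "Refunds are allowed within 30 days. After that, a manager must approve the refund."
for piece in chunk_by_chars(doc, 40):
    print(repr(piece))
```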

Retriever Errors and Omissions

The retrieval component is the gatekeeper of truth – if it fails, your LLM is flying blind. There are a few failure modes to watch:

Relevant info not retrieved: Sometimes, the needed document isn’t in the top results (or not in the knowledge base at all!). The LLM then has no correct data to work with and may end up fabricating an answer. Even if the info is there, the retriever might not grab it due to vocabulary mismatch or embedding quirks.

Irrelevant or noisy context: The retriever might return some passages that are only loosely related or contain conflicting info. Feeding that into the LLM can lead to incorrect answers if the model latches onto the wrong text. One common issue is overlapping content: if multiple docs say almost the same thing in different words, a simplistic retriever might pull several redundant snippets instead of another source that has the missing piece of info.

Semantic search gaps: Pure vector search can miss exact keyword matches, such as product codes, error IDs, or acronyms. Conversely, it might retrieve something semantically close but contextually off.
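One partial mitigation for the redundancy problem is to drop retrieved snippets that overlap heavily with a snippet already kept, freeing context slots for other sources. The sketch below uses word-level Jaccard similarity as a cheap stand-in for a real similarity measure; the 0.6 threshold is an arbitrary assumption you would tune on your own data.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def dedupe(ranked: list[str], threshold: float = 0.6) -> list[str]:
    """Walk the ranked list; keep a snippet only if it is not a near-duplicate of one already kept."""
    kept: list[str] = []
    for snippet in ranked:
        if all(jaccard(tokens(snippet), tokens(k)) < threshold for k in kept):
            kept.append(snippet)
    return kept


ranked = [
    "Remote work requires manager approval.",
    "Remote work requires approval from your manager.",  # same fact, different wording
    "Remote employees get a 500 EUR home-office stipend.",
]
print(dedupe(ranked))
```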

Single-Step Reasoning Limitations

A naïve RAG pipeline does one round of retrieval per query and hands everything to the LLM in one go. But what if a question actually requires multiple hops of reasoning or pulling together information from multiple sources? For example: “How has the maternity leave policy changed between 2021 and 2024 for full-time employees?” This requires knowing the maternity leave policy for both 2021 and 2024, which might live in two different documents, and then comparing the differences, which may include changes in duration, eligibility, or benefits.
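A toy version of this example shows why one retrieval pass is not enough. The sketch below assumes a hypothetical three-document corpus and a word-overlap retriever that returns a single best match; a simple decomposition rule (one sub-query per year mentioned) stands in for what an LLM planner would do in practice.

```python
import re

YEAR = r"\b(?:19|20)\d{2}\b"

# Hypothetical corpus: each year's policy lives in a separate document.
DOCS = {
    "hr-policy-2021": "2021 maternity leave policy: full-time employees receive 14 weeks of paid leave.",
    "hr-policy-2024": "2024 maternity leave policy: full-time employees receive 20 weeks of paid leave.",
    "it-policy-2024": "2024 laptop policy: devices are replaced every three years.",
}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top1(query: str) -> str:
    """Toy single-shot retriever: ID of the document sharing the most words with the query."""
    return max(DOCS, key=lambda doc_id: len(words(query) & words(DOCS[doc_id])))


def decompose(question: str) -> list[str]:
    """Hypothetical decomposition rule: one sub-query per year mentioned in the question."""
    topic = re.sub(YEAR, "", question)
    return [f"{topic} {year}" for year in re.findall(YEAR, question)]


question = "How has the maternity leave policy changed between 2021 and 2024 for full-time employees?"
print(top1(question))                          # one retrieval returns only one of the two policies
print([top1(q) for q in decompose(question)])  # one retrieval per hop returns both
```

The comparison itself (14 vs. 20 weeks in this made-up data) would then be left to the LLM, now that both documents are in its context.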

Lack of Control & Validation

The standard RAG pipeline has little built-in oversight. The LLM is fed whatever the retriever returns, and we trust the model to use it correctly. If the model ignores the retrieved text or, worse, misuses it, the system won’t catch that before the answer reaches the user. A naïve setup has no feedback loop to double-check: “Did we answer the question accurately with the given data?” It might include source citations, but who verifies that those are correct? In short, out-of-the-box RAG doesn’t know when it’s wrong – a big issue for enterprise adoption.

Given these challenges, it’s no surprise that early RAG pilots can disappoint. Does that mean we throw RAG away? Not at all – it means the era of agentic RAG has arrived.

The Rise of Agentic RAG: Making RAG Work in the Real World

To address the pitfalls above, the RAG approach itself is evolving. Think of this as RAG growing up from a quick hack into an engineering discipline. Modern, enterprise-grade RAG systems incorporate a lot more sophistication under the hood. Here are some key upgrades:

Better Data Ingestion & Chunking

The first step is cleaning and structuring the knowledge sources. Advanced RAG pipelines use document parsers tailored to the content type – HTML cleaners for web pages, PDF extractors that preserve tables, and perhaps even OCR for scanned images. Instead of chopping text arbitrarily, they employ smarter chunking strategies (e.g., splitting documents by section headings or semantic units). The goal is to create retrieval chunks that are coherent, complete pieces of information. This reduces noise and makes it easier for the LLM to find answers. For example, a table of financial data might be indexed with its row and column structure intact, or converted to a small CSV that can be queried, rather than flattened into a blob of text. If you don’t get this ingestion step right, everything downstream suffers.
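Splitting by section headings can be sketched in a few lines. This assumes the parser has already converted each document to Markdown-style `#` headings (a hypothetical intermediate format); a production chunker would also cap section length and handle tables separately. Each chunk keeps its heading as metadata, which can later be used for filtering or for showing the user where an answer came from.

```python
import re


def chunk_by_headings(text: str) -> list[dict]:
    """Split a document at Markdown-style headings so each chunk is one complete section."""
    chunks = []
    heading, lines = "Untitled", []
    for line in text.splitlines():
        match = re.match(r"^#{1,6}\s+(.*)", line)
        if match:
            # Close the previous section before starting a new one (skip empty preambles).
            if any(ln.strip() for ln in lines):
                chunks.append({"heading": heading, "text": "\n".join(lines).strip()})
            heading, lines = match.group(1).strip(), []
        else:
            lines.append(line)
    if any(ln.strip() for ln in lines):
        chunks.append({"heading": heading, "text": "\n".join(lines).strip()})
    return chunks


doc = """# Refund policy
Refunds are allowed within 30 days.
After that, a manager must approve the refund.

# Shipping
Orders ship within 2 business days."""

for chunk in chunk_by_headings(doc):
    print(chunk)
```

Unlike fixed-size cutting, the refund rule and its exception now stay in the same chunk.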

Hybrid and Smarter Retrieval

Agentic RAG often combines multiple retrieval techniques to improve accuracy:

Hybrid search blends semantic vector search with lexical keyword search, so you catch both conceptual similarity and exact keyword matches. For instance, combining an embedding-based search with a BM25 keyword search can yield better recall: one catches the “fuzzy” matches, and the other ensures important keywords are not overlooked. In practice, this usually means retrieving results from both systems and merging them. Because the two systems’ raw scores are not directly comparable, a common choice is a rank-fusion method such as Reciprocal Rank Fusion (RRF), which merges the lists by rank position rather than by score.
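Reciprocal Rank Fusion is simple enough to show in full. In the sketch below, each document scores the sum of 1 / (k + rank) over the result lists it appears in; k = 60 is the value commonly used with RRF. The document IDs and the two hit lists are made up for illustration.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of document IDs; only rank positions matter, never raw scores."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


vector_hits = ["doc-a", "doc-b", "doc-c"]  # e.g. from an embedding search
bm25_hits = ["doc-c", "doc-a", "doc-d"]    # e.g. from a BM25 keyword search
print(reciprocal_rank_fusion([vector_hits, bm25_hits]))
```

Here `doc-c`, only third in the vector results, moves up to second because the keyword search ranked it first, while documents found by a single system drop below those found by both.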

Re-ranking: Instead of trusting the raw similarity scores, an extra model (often a smaller LM or a cross-encoder) can re-read the top 20–50 retrieved snippets and score which ones are truly most relevant to the question. This second-pass re-ranking can substantially improve the relevance of the final context fed to the LLM, filtering out the tangential matches that slip through initial retrieval.
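The two-stage shape of re-ranking is sketched below. The scorer is a pluggable function of `(query, passage)`; in practice it might wrap a cross-encoder (for example `CrossEncoder.predict` from the sentence-transformers library), but here a word-coverage heuristic stands in so the example runs without a model. `rerank` and `toy_scorer` are hypothetical names.

```python
import re
from typing import Callable


def rerank(query: str, candidates: list[str],
           score_pair: Callable[[str, str], float], top_k: int = 3) -> list[str]:
    """Second pass: score every (query, passage) pair and keep only the best top_k."""
    scored = [(score_pair(query, passage), passage) for passage in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in scored[:top_k]]


def toy_scorer(query: str, passage: str) -> float:
    """Stand-in for a cross-encoder: fraction of query words that appear in the passage."""
    q = set(re.findall(r"[a-z0-9]+", query.lower()))
    p = set(re.findall(r"[a-z0-9]+", passage.lower()))
    return len(q & p) / len(q) if q else 0.0


candidates = [  # in practice, the top 20-50 hits from the first-pass retriever
    "Our office dogs policy allows dogs on Fridays.",
    "Parental leave for full-time employees is 20 weeks.",
    "Leave requests are filed in the HR portal.",
]
print(rerank("parental leave weeks for full-time employees", candidates, toy_scorer, top_k=2))
```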

Recursive or multi-step retrieval: If the first retrieval doesn’t fully answer the query, an advanced system can detect that and do a follow-up retrieval. For example, if a question has multiple sub-questions, the system might retrieve for one part and then use info from that result to formulate a second query. This is like the system iteratively drilling down on the knowledge base. It requires orchestration (potentially an agent controlling this process).
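A minimal version of that loop: retrieve, let a planner look at what was found and propose a follow-up query (or stop), and repeat up to a fixed number of hops. The knowledge base, the keyword retriever, and the regex-based planner below are all hypothetical stand-ins; in a real system the planner would be an LLM call.

```python
import re
from typing import Callable, Optional


def multi_hop_retrieve(
    question: str,
    retrieve: Callable[[str], list[str]],
    next_query: Callable[[str, list[str]], Optional[str]],
    max_hops: int = 3,
) -> list[str]:
    """Retrieve, ask the planner for a follow-up query, and repeat until it returns None."""
    context: list[str] = []
    query: Optional[str] = question
    for _ in range(max_hops):
        if query is None:
            break
        for passage in retrieve(query):
            if passage not in context:  # avoid re-adding passages found in earlier hops
                context.append(passage)
        query = next_query(question, context)
    return context


# Hypothetical knowledge base keyed by the words a query must contain.
KB = {
    "billing service owner": "The billing service is owned by the Payments team.",
    "payments team expense approver": "Expenses for the Payments team are approved by Dana.",
}


def toy_retrieve(query: str) -> list[str]:
    """Stand-in retriever: return passages whose key words all appear in the query."""
    q = query.lower()
    return [text for key, text in KB.items() if all(word in q for word in key.split())]


def toy_next_query(question: str, context: list[str]) -> Optional[str]:
    """Stand-in planner: once the owning team is known, ask who approves its expenses."""
    if any("approved by" in passage for passage in context):
        return None  # context now covers both hops
    for passage in context:
        match = re.search(r"owned by the (\w+) team", passage)
        if match:
            return f"{match.group(1)} team expense approver"
    return None


question = "Who approves expenses for the owner of the billing service?"
print(multi_hop_retrieve(question, toy_retrieve, toy_next_query))
```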

Handling Tables and Structured Data

Not all knowledge is in text paragraphs. Enterprises have databases, CSV exports, and tables embedded in documents. A smart RAG pipeline treats structured data differently from free text. One strategy is to route questions that are really about tabular data to a SQL query or a small database lookup. For example, if a user asks, “How many open support tickets do we have this week?”, it’s much more reliable to run a query on the support ticket database than to rely on an LLM scanning some text summary.
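For the ticket question, the SQL route might look like the sketch below, using Python's built-in `sqlite3` with an in-memory table. The `tickets` schema and rows are hypothetical, and "this week" is interpreted as "opened since Monday and still open"; that interpretation is an assumption a real system would need to pin down with the business.

```python
import sqlite3
from datetime import date, timedelta

# Hypothetical ticket table; in production this would be the real ticketing database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tickets (id INTEGER PRIMARY KEY, status TEXT, opened_on TEXT)")
today = date(2024, 5, 15)  # fixed date so the example is reproducible
conn.executemany(
    "INSERT INTO tickets (status, opened_on) VALUES (?, ?)",
    [
        ("open", today.isoformat()),
        ("open", (today - timedelta(days=2)).isoformat()),
        ("closed", (today - timedelta(days=1)).isoformat()),
        ("open", (today - timedelta(days=30)).isoformat()),
    ],
)


def open_tickets_this_week(conn: sqlite3.Connection, today: date) -> int:
    """Exact count of tickets opened since Monday of the current week that are still open."""
    week_start = today - timedelta(days=today.weekday())  # Monday of the current week
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM tickets WHERE status = 'open' AND opened_on >= ?",
        (week_start.isoformat(),),
    ).fetchone()
    return count


print(open_tickets_this_week(conn, today))
```

ISO-8601 date strings sort lexicographically in date order, which is why the plain string comparison in the `WHERE` clause works here.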

The bottom line: treat your knowledge base as multimodal – text, tables, maybe images – and bring the appropriate retrieval method to each.

Agentic Orchestration

One of the biggest trends in “next-gen” RAG is adding an agent to manage the retrieval and reasoning process. In Agentic RAG, the LLM (or a collection of LLM-based agents) can decide how to answer a query by breaking it into steps, doing multiple retrievals, calling different tools, and even verifying results. Essentially, we give the LLM a bit of agency to orchestrate its own retrieval pipeline. For example, an agentic RAG system could handle a tough question like this: first recognize that part of the question concerns database records and query a SQL tool; then recognize that another part needs a policy document and run a vector search over the docs; finally, combine the two pieces of information and ask another LLM to compose the final answer. It may also do things like query translation (rephrasing the user’s question to better search the docs) or metadata-based routing.

In essence, agentic RAG turns the RAG pipeline into a flexible workflow. It’s like moving from a single smart assistant to an entire team of specialized assistants coordinating with each other. Of course, this added power comes with complexity. Agentic RAG systems are harder to build and require more compute. More moving parts mean more chances that something goes wrong: agents might choose a poor strategy or get stuck in a loop. And each “agent” step usually means another LLM invocation (or tool call), which increases latency and cost.
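The core of such an agent can be sketched as a loop: a policy picks the next tool, the tool runs, the observation is recorded, and the loop ends when the policy says "finish" or a hard step budget runs out. The budget is one simple guard against the looping problem mentioned above. The policy and both tools here are hypothetical stubs; in a real system the policy would be an LLM call and the tools would hit the ticket database and the vector index.

```python
from typing import Callable

Action = tuple[str, str]       # (tool name, tool input); the name "finish" ends the loop
Observation = tuple[str, str]  # (tool name, tool output)


def run_agent(
    question: str,
    choose_action: Callable[[str, list[Observation]], Action],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> list[Observation]:
    """Minimal agent loop: choose a tool, run it, record the result, repeat."""
    observations: list[Observation] = []
    for _ in range(max_steps):  # hard budget so a confused policy cannot loop forever
        tool_name, tool_input = choose_action(question, observations)
        if tool_name == "finish":
            break
        observations.append((tool_name, tools[tool_name](tool_input)))
    return observations


def toy_policy(question: str, observations: list[Observation]) -> Action:
    """Hypothetical stand-in for an LLM planner choosing the next step."""
    used = {name for name, _ in observations}
    if "tickets" in question and "sql" not in used:
        return "sql", "SELECT COUNT(*) FROM tickets WHERE status = 'open'"
    if "policy" in question and "doc_search" not in used:
        return "doc_search", "support ticket escalation policy"
    return "finish", ""


# Stub tools returning canned strings.
tools = {
    "sql": lambda query: "42",
    "doc_search": lambda query: "Tickets open longer than 48 hours are escalated.",
}

question = "How many tickets are open, and what does our policy say about escalating them?"
for tool_name, observation in run_agent(question, toy_policy, tools):
    print(tool_name, "->", observation)
# A final LLM call would compose the answer from these observations.
```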

Corrective RAG

Another emerging element in Agentic RAG pipelines is post-retrieval/pre-answer validation – basically, catching mistakes before they reach the user. This is sometimes dubbed “Corrective RAG.” One approach is using a secondary model to verify the answer against the sources. For example, after the LLM drafts an answer, you might have a fact-checker model or even a simple script to ensure that each claimed fact is indeed present in the retrieved text. If something looks like a hallucination, the system could trigger an additional retrieval or ask the LLM to try again with more constraints.
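The “simple script” variant can be sketched as a lexical grounding check: split the draft answer into sentences and flag any sentence whose words are mostly absent from every retrieved source. This is a crude proxy (it would miss a changed number inside an otherwise matching sentence, for instance), and real systems more often use an NLI model or an LLM judge; the 0.8 threshold and the function name are assumptions.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def unsupported_sentences(answer: str, sources: list[str], min_overlap: float = 0.8) -> list[str]:
    """Flag sentences for which no single source contains at least min_overlap of their words."""
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        s_words = words(sentence)
        if not s_words:
            continue
        best = max((len(s_words & words(src)) / len(s_words) for src in sources), default=0.0)
        if best < min_overlap:
            flagged.append(sentence)
    return flagged


sources = ["Full-time employees receive 20 weeks of paid maternity leave."]
draft = "Full-time employees receive 20 weeks of paid maternity leave. Part-time employees receive 12 weeks."
flagged = unsupported_sentences(draft, sources)
if flagged:
    # Here the pipeline would trigger another retrieval or re-prompt with stricter instructions.
    print("Possible hallucination:", flagged)
```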

Conclusion

RAG as an idea – augmenting LLMs with external knowledge – is very much alive and increasingly indispensable for enterprise AI. What’s “dead” (or dying) is the notion that a quick and dirty RAG hack is enough to solve hard knowledge problems. Simple RAG demos work great on happy-path examples, but production needs a smarter approach. The good news is that this smarter RAG (call it RAG 2.0, “Agentic RAG”, or whatever buzzword you prefer) is rising to the challenge, incorporating better retrieval, reasoning, and validation mechanisms.