As we navigate through 2025, the AI landscape is experiencing a significant shift with the emergence of reasoning models like OpenAI’s o1 series, DeepSeek R1, and Gemini 2.5 Pro Reasoning. These models promise enhanced problem-solving capabilities through explicit “thinking” processes, but they also come with trade-offs. In this post, we’ll explore when to use reasoning models versus traditional non-reasoning LLMs, based on our practical experience at Leena AI.

Understanding the Fundamental Difference

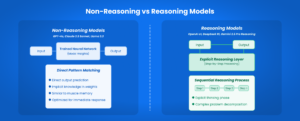

Non-Reasoning Models

Traditional LLMs like GPT-4o, Claude 3.5 Sonnet, and Llama 3.3 are trained to produce the final output directly, without an explicit intermediate reasoning phase. They excel at generating immediate responses based on their training data, essentially functioning as sophisticated pattern-matching systems. When you ask a non-reasoning model a question, it draws on its training to produce an answer in a single pass, similar to how muscle memory works: the knowledge is implicit in the model’s weights.

Reasoning Models

Reasoning models, such as OpenAI’s o1, DeepSeek R1, and Gemini 2.5 Pro Reasoning, incorporate an explicit “thinking” phase where they work through problems step-by-step before providing an answer. They typically generate intermediate reasoning steps, either visible to users or processed internally, which allows them to tackle more complex problems that require sequential thinking.

Key Factors in Production: Cost and Latency

When deciding between reasoning and non-reasoning models in production environments, two critical factors emerge:

1. Cost Considerations

Reasoning models are significantly more expensive to operate:

- They generate substantially more tokens due to their chain-of-thought processes

- The cost scales with response length (twice the tokens means twice the cost)

- According to benchmarks, reasoning models can be 5-10x more expensive per request than standard models
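To make the cost arithmetic concrete, here is a minimal sketch of a per-request cost estimate. The prices and token counts are hypothetical placeholders, not real rates. It also assumes that reasoning tokens are billed at the output-token rate and that both model types share the same per-token price; check your provider's pricing before relying on either assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical per-million-token prices in USD (placeholders, not real rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int,
                 reasoning_tokens: int = 0) -> float:
    """Estimate the cost of one request in USD.

    Assumption: hidden reasoning tokens are billed like output tokens.
    """
    billed_output = output_tokens + reasoning_tokens
    total = (input_tokens * pricing.input_per_million
             + billed_output * pricing.output_per_million)
    return total / 1_000_000


# Illustrative numbers only: same prompt and answer length, but the
# reasoning model also spends 4,000 tokens "thinking".
PRICING = Pricing(input_per_million=1.0, output_per_million=4.0)
standard = request_cost(PRICING, input_tokens=1_000, output_tokens=500)
reasoning = request_cost(PRICING, input_tokens=1_000, output_tokens=500,
                         reasoning_tokens=4_000)
cost_ratio = reasoning / standard

print(f"standard:  ${standard:.4f}")
print(f"reasoning: ${reasoning:.4f}")
print(f"ratio:     {cost_ratio:.1f}x")
```

With these made-up numbers the reasoning request costs a little over six times the standard one, driven entirely by the extra thinking tokens.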

2. Latency Impact

The performance difference is stark:

- Reasoning models typically have higher latency due to their thinking process

- Time to First Token (TTFT) can be several seconds longer

- For real-time applications, this added latency can be prohibitive
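A simple way to see the latency gap is to time a streamed response. The sketch below measures TTFT and total latency for any iterable of tokens. The `fake_stream` generator is a hypothetical stand-in for a real streaming client, with a sleep simulating the thinking phase; swap in your own stream to take real measurements.

```python
import time
from typing import Iterable, Iterator, List, Sequence, Tuple


def measure_stream(stream: Iterable[str]) -> Tuple[float, float, str]:
    """Consume a token stream and return (ttft_seconds, total_seconds, text)."""
    start = time.perf_counter()
    first_token_at = None
    parts: List[str] = []
    for token in stream:
        if first_token_at is None:
            first_token_at = time.perf_counter()
        parts.append(token)
    end = time.perf_counter()
    # An empty stream has no first token, so fall back to the total time.
    ttft = (first_token_at if first_token_at is not None else end) - start
    return ttft, end - start, "".join(parts)


def fake_stream(thinking_delay_s: float, tokens: Sequence[str]) -> Iterator[str]:
    """Hypothetical stand-in for a model stream: wait, then emit tokens."""
    time.sleep(thinking_delay_s)
    for token in tokens:
        yield token


fast_ttft, _, _ = measure_stream(fake_stream(0.0, ["Hello", " world"]))
slow_ttft, _, _ = measure_stream(fake_stream(0.05, ["Hello", " world"]))
print(f"no thinking delay: TTFT {fast_ttft * 1000:.1f} ms")
print(f"with thinking:     TTFT {slow_ttft * 1000:.1f} ms")
```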

Where Reasoning Models Excel

Think of reasoning models as Lego blocks: they excel when solving a problem requires sequentially assembling components that cannot be pre-memorized. They perform best in:

- Complex Mathematical Problems: Tasks requiring multi-step calculations where you can’t skip intermediate steps

- Advanced Coding Challenges: Debugging complex issues, implementing sophisticated algorithms

- Logical Puzzles: Problems that require systematic exploration of solution paths

- Scientific Reasoning: Tasks involving hypothesis testing and experimental design

- Multi-step Planning: Scenarios requiring careful consideration of dependencies and sequences

Where Non-Reasoning Models Shine

Non-reasoning models are like muscle memory – they excel at tasks where the reasoning is implicit or already encoded in their weights. They’re optimal for:

- Knowledge Retrieval: Answering factual questions from training data

- Text Transformation: Summarization, translation, paraphrasing

- Entity Extraction: Finding keywords, names, or specific information

- Pattern Recognition: Sentiment analysis, classification tasks

- Creative Writing: Content generation where the logical flow is more flexible
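The two lists above can be turned into a simple routing rule. The sketch below is one illustrative way to encode them, not a description of any production router. The task labels, the `latency_sensitive` flag, and the default for unknown tasks are all assumptions made for the example.

```python
# Task labels are hypothetical names for the categories listed above.
REASONING_TASKS = {
    "math",
    "advanced_coding",
    "logic_puzzle",
    "scientific_reasoning",
    "multi_step_planning",
}

NON_REASONING_TASKS = {
    "knowledge_retrieval",
    "text_transformation",
    "entity_extraction",
    "pattern_recognition",
    "creative_writing",
}


def choose_model_family(task_type: str, latency_sensitive: bool = False) -> str:
    """Return "reasoning" or "non_reasoning" for a task label.

    Assumptions: real-time paths avoid reasoning models because of the
    added latency, and unknown tasks default to the cheaper family.
    """
    if task_type in REASONING_TASKS and not latency_sensitive:
        return "reasoning"
    return "non_reasoning"


print(choose_model_family("math"))
print(choose_model_family("math", latency_sensitive=True))
print(choose_model_family("entity_extraction"))
```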

Leena AI’s Experience with Reasoning Models

At Leena AI, we’ve gained valuable insights implementing reasoning models in our Agentic RAG system, which differs from standard RAG by requiring multiple reasoning steps before providing answers. Our findings include:

The Challenge

Our Agentic RAG needs to:

- Identify conflicting information across documents

- Personalize responses based on user context

- Determine when follow-up questions are necessary

- Synthesize information from multiple sources

Initially, reasoning models seemed ideal for these multi-step processes. However, we encountered significant challenges:

Key Observations

- Inconsistent Reasoning: The same prompt could produce different reasoning paths, making the system unpredictable

- Performance Variability: Similar queries yielded varying quality of responses

- Production Reliability: The inconsistency made it difficult to maintain service quality at scale
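One way to put a number on this kind of inconsistency is to send the same prompt several times and measure how often the answers agree. The sketch below is a minimal version of that idea. The `generate` callable is a hypothetical placeholder for a model call, and comparing whitespace- and case-normalized strings is a simplifying assumption; free-form answers usually need a semantic comparison instead.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def agreement_rate(generate: Callable[[str], str], prompt: str, runs: int = 10) -> float:
    """Fraction of runs that return the most common (normalized) answer."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Normalize case and whitespace so trivial differences do not count.
    answers = [" ".join(generate(prompt).lower().split()) for _ in range(runs)]
    _, top_count = Counter(answers).most_common(1)[0]
    return top_count / runs


# Hypothetical stand-in for a model that sometimes changes its answer.
_canned = cycle(["12 days", "12 days", "15 days", "12  Days"])


def flaky_model(prompt: str) -> str:
    return next(_canned)


rate = agreement_rate(flaky_model, "How many leave days do I have?", runs=8)
print(f"agreement rate: {rate:.2f}")
```

Tracking a metric like this per query type makes it easier to compare a reasoning model against a prompted non-reasoning model on consistency rather than on single-run quality.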

Our Solution

Instead of relying on reasoning models, we developed a hybrid approach:

1. We crafted explicit reasoning instructions for non-reasoning models.

2. These instructions guide the model through the necessary reasoning steps.

3. The result: more consistent performance with better reliability.
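As an illustration of what explicit reasoning instructions can look like, here is a minimal prompt-builder sketch. It is not our production prompt: the step wording, the `build_prompt` function, and the document labels are hypothetical, chosen to mirror the four requirements listed under The Challenge.

```python
from typing import Sequence

# Hypothetical step wording; tune these per problem type.
REASONING_STEPS = (
    "List any statements in the documents that conflict with each other.",
    "Note which details of the user context change the answer.",
    "Decide whether a follow-up question is needed; if so, ask it instead of answering.",
    "Synthesize the final answer from the relevant documents.",
)


def build_prompt(question: str, documents: Sequence[str], user_context: str,
                 steps: Sequence[str] = REASONING_STEPS) -> str:
    """Assemble a prompt that walks a non-reasoning model through fixed steps."""
    doc_block = "\n\n".join(
        f"[Document {i}]\n{doc}" for i, doc in enumerate(documents, start=1)
    )
    step_block = "\n".join(
        f"Step {i}: {step}" for i, step in enumerate(steps, start=1)
    )
    return (
        "Answer the question using only the documents below.\n"
        "Work through every step in order and label each one before the final answer.\n\n"
        f"{step_block}\n\n"
        f"User context: {user_context}\n\n"
        f"{doc_block}\n\n"
        f"Question: {question}"
    )


prompt = build_prompt(
    question="How many days of parental leave do I get?",
    documents=["Policy A: 12 weeks of leave.", "Policy B: 16 weeks of leave."],
    user_context="Location: India; employment type: full-time",
)
print(prompt)
```

Because the steps are fixed in the prompt, every request follows the same path, which is the source of the consistency gain; the cost is that each problem type needs its own list of steps.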

The Trade-off

While our approach requires manual crafting of reasoning instructions for each problem type, it provides:

- Predictable performance

- Lower costs

- Better latency

- More consistent results in production

Key Takeaways

- Current reasoning models still have limitations: While promising, they’re not yet reliable enough for many production use cases requiring consistency

- Non-reasoning models can be taught to reason: With properly structured prompts and explicit reasoning instructions, traditional models can achieve similar results with better reliability

- Consider the production environment: Cost, latency, and consistency requirements often favor non-reasoning models with enhanced prompting

- Manual effort vs. automation: Writing reasoning instructions by hand takes more effort than relying on automated reasoning, but the gain in production stability is worth it

- The future is hybrid: As reasoning models mature, we expect to see hybrid approaches that combine the best of both worlds

Looking Forward

While reasoning models represent an exciting frontier in AI capabilities, our experience suggests that for many real-world applications, well-structured prompting with non-reasoning models offers a more practical solution today. As these technologies evolve, we anticipate that reasoning capabilities will become more reliable and eventually standard across all LLMs.

At Leena AI, we continue to explore and evaluate new models as they emerge, always balancing innovation with the practical needs of enterprise deployment. The key is not choosing between reasoning and non-reasoning models categorically, but understanding which tool fits which job – and sometimes, teaching an old model new tricks is the best approach.

Are you exploring reasoning models for your enterprise AI applications? We’d love to hear about your experiences and challenges. Reach out to our team to discuss how we’re solving these problems at scale.