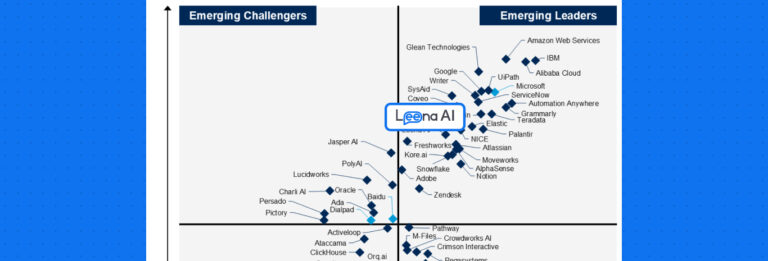

Over the past year, we’ve witnessed a seemingly paradoxical trend in the development of large language models (LLMs): they have become more accessible, more cost-effective, and more deeply integrated into our digital ecosystem, yet the pace of improvement in their core text intelligence appears to have slowed considerably. At Leena AI, we’ve been closely monitoring these developments and have identified several key factors contributing to this plateau. This post presents our analysis of the current state of non-reasoning LLMs and where the industry is headed.

The Diminishing Returns in Model Improvements

Historic Leaps vs. Incremental Gains

The transition from GPT-3.5 to GPT-4 in March 2023 represented a monumental leap in capabilities. According to OpenAI’s internal evaluations, GPT-4 was 40% more likely to produce factual responses and 82% less likely to respond to requests for disallowed content than its predecessor. This advance fundamentally changed what was possible with commercial LLMs and set new expectations for the industry.

Similarly, when Claude 3 was released in March 2024, its performance on benchmarks like MMLU, GSM8K, and MATH showed substantial improvements over Claude 2, often narrowing or closing the gap with GPT-4.

However, when we examine more recent model releases and updates, a different pattern emerges:

Recent model updates like GPT-4o, Claude 3.5/3.7, and Gemini 2.0/2.5 have delivered far less dramatic improvements in core text intelligence. While they’ve introduced important enhancements in other areas (which we’ll discuss later), the rate of improvement on standard NLP benchmarks has noticeably flattened.

Our analysis indicates that the jump from GPT-3.5 to GPT-4 delivered a 15-25% improvement across key benchmarks, whereas the move from GPT-4 to GPT-4o delivered only a 3-7% improvement on the same metrics. Similarly, Claude 3.5 Sonnet showed only modest gains over Claude 3 Opus in most text-based tasks.
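When reading comparisons like these, it helps to separate three ways of reporting a benchmark gain: absolute points, relative improvement, and the share of remaining error removed. The sketch below is purely illustrative. The function names and the example scores (70, 86, 90) are hypothetical and are not measurements from our analysis.

```python
def absolute_gain(old: float, new: float) -> float:
    """Gain in percentage points."""
    return new - old


def relative_gain(old: float, new: float) -> float:
    """Gain as a percentage of the old score."""
    return (new - old) / old * 100.0


def error_reduction(old: float, new: float, ceiling: float = 100.0) -> float:
    """Share of the remaining headroom (ceiling - old) that the new score closes, in percent."""
    return (new - old) / (ceiling - old) * 100.0


# Hypothetical benchmark scores, chosen only to illustrate the arithmetic.
comparisons = [
    ("earlier generation jump", 70.0, 86.0),
    ("later incremental update", 86.0, 90.0),
]

for label, old, new in comparisons:
    print(
        f"{label}: +{absolute_gain(old, new):.1f} points, "
        f"{relative_gain(old, new):.1f}% relative, "
        f"{error_reduction(old, new):.1f}% of remaining error removed"
    )
```

With these made-up scores, the same update looks small as a relative gain but larger as a share of remaining error, so it matters which measure a comparison uses once scores approach a benchmark's ceiling.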

The Last Mile Problem in AI Development

This pattern reflects a challenge familiar from other areas of technology development: the last 10-20% of performance improvement is far harder to achieve than the first 80-90%.

As models approach human-level performance on various benchmarks, each incremental gain requires disproportionately more resources:

- Data limitations: Models are now trained on nearly all high-quality text content publicly available on the internet

- Architectural constraints: Current transformer architectures may be approaching their theoretical limits

- Evaluation ceiling: Many benchmarks are becoming saturated, making progress harder to measure

As one researcher at an AI lab explained to us, “We’re moving from the steep part of the S-curve to the flatter part. Progress will continue, but at a different pace and in different directions.”
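The S-curve intuition can be made concrete with a logistic function. This is a minimal sketch, assuming capability follows a logistic curve in cumulative effort; the `ceiling`, `midpoint`, and `rate` parameters are arbitrary illustrative values, not fitted to any real model or benchmark.

```python
import math


def capability(effort: float, ceiling: float = 100.0,
               midpoint: float = 5.0, rate: float = 1.0) -> float:
    """Logistic S-curve: capability as a function of cumulative effort."""
    return ceiling / (1.0 + math.exp(-rate * (effort - midpoint)))


def marginal_gain(effort: float, step: float = 1.0) -> float:
    """Capability gained by investing one more `step` of effort at this point."""
    return capability(effort + step) - capability(effort)


# Effort units are abstract (think compute, data, or engineering time).
for effort in (2, 5, 8, 10):
    print(
        f"effort={effort:>2}: capability={capability(effort):5.1f}, "
        f"next-step gain={marginal_gain(effort):5.2f}"
    )
```

Under this assumption, the gain from one more unit of effort is largest around the midpoint and shrinks steadily as capability approaches the ceiling, which is the "flatter part" the quote describes.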

The Multimodal Shift: Where Improvements Are Focused

While text intelligence improvements have slowed, model developers have focused significant attention on expanding capabilities in other dimensions:

Vision, Voice, and Video Integration

The most dramatic improvements in recent model releases have been in multimodal capabilities:

- GPT-4o: Significantly better image understanding, real-time voice interaction, and code generation with visual context

- Claude 3.5/3.7: Enhanced image analysis, improved chart and diagram comprehension

- Gemini 2.0/2.5: Native multimodal capabilities including video understanding

These improvements represent a broadening rather than a deepening of capabilities. Models can now work across more modalities and handle more complex visual inputs, but their core text reasoning hasn’t advanced at the same rate.

Chart 2 (Multimodal Capabilities Growth): Growth in multimodal capabilities across major LLM releases in 2024-2025.

Latency and Cost Optimization

Another area of significant improvement has been in operational efficiency rather than raw intelligence:

- GPT-4o: 2x faster response generation compared to GPT-4

- Claude 3.5 Haiku: 75% cost reduction compared to Claude 3 Sonnet with minimal performance tradeoffs

- Gemini 2.0 Flash: Significantly reduced latency and token pricing

These improvements make models more practical for production applications, but don’t necessarily make them “smarter” in terms of reasoning or knowledge.
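To see how a per-token price cut translates into a production budget, a rough calculation like the one below can help. It is a sketch under stated assumptions: the prices and token volumes are hypothetical placeholders, not any vendor's actual rates, and `monthly_cost` and `percent_reduction` are names introduced here for illustration.

```python
def monthly_cost(input_tokens: int, output_tokens: int,
                 input_price_per_mtok: float, output_price_per_mtok: float) -> float:
    """Cost in dollars, given prices quoted per million tokens."""
    return (input_tokens / 1_000_000) * input_price_per_mtok \
        + (output_tokens / 1_000_000) * output_price_per_mtok


def percent_reduction(old_cost: float, new_cost: float) -> float:
    """Cost reduction as a percentage of the old cost."""
    return (old_cost - new_cost) / old_cost * 100.0


# Hypothetical workload: 200M input tokens and 50M output tokens per month.
workload = dict(input_tokens=200_000_000, output_tokens=50_000_000)

# Hypothetical prices in dollars per million tokens.
baseline = monthly_cost(**workload, input_price_per_mtok=4.00, output_price_per_mtok=20.00)
cheaper = monthly_cost(**workload, input_price_per_mtok=1.00, output_price_per_mtok=5.00)

print(f"baseline: ${baseline:,.2f}, cheaper model: ${cheaper:,.2f}, "
      f"reduction: {percent_reduction(baseline, cheaper):.0f}%")
```

Input and output tokens are priced separately because most providers charge different rates for each, so the realized saving depends on the workload's input-to-output ratio as well as on the headline price cut.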

The Data Bottleneck: Quality Content Scarcity

A fundamental challenge facing LLM developers is the growing scarcity of high-quality training data.

The Internet Data Has Been Consumed

Leading models have already ingested nearly all high-quality, publicly available text on the internet. With each new model release, the pool of unexplored high-quality content shrinks further. This creates several interrelated problems:

- Diminishing returns from new web content: Most new web content isn’t adding novel information for models to learn from

- Quality filtering limits: As models consume more data, increasingly stringent quality filters must be applied to avoid training on low-quality content

- Synthetic pollution: A growing percentage of new internet content is AI-generated, creating potential feedback loops

The Synthetic Internet Problem

Perhaps the most concerning trend is the “synthetic internet” phenomenon. As LLMs generate an increasing proportion of online content, new models risk being trained on outputs from earlier models rather than genuine human knowledge.

Our research indicates that up to 30% of new text content being published online may be wholly or partially AI-generated. This synthetic content rarely contains genuinely new concepts or knowledge; instead, it tends to recycle and repackage existing information.

While some LLM-generated content does include novel human insights (when humans use LLMs primarily as writing tools rather than content generators), much of it lacks the originality and depth that would make it valuable for training future models.
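A simple back-of-the-envelope model shows how the synthetic share of the overall corpus grows over time. This sketch assumes the 30% upper estimate above for new content and a hypothetical 10% annual growth in total text; it tracks corpus composition only and says nothing about the effect on model quality.

```python
def synthetic_share_over_time(years: int,
                              annual_growth: float = 0.10,
                              synthetic_fraction_of_new: float = 0.30) -> list[float]:
    """Return the synthetic share of the cumulative corpus at the end of each year.

    Starts from a normalized, fully human-written corpus of size 1.0.
    `annual_growth` is a hypothetical assumption; `synthetic_fraction_of_new`
    defaults to the "up to 30%" estimate quoted in the text.
    """
    human, synthetic = 1.0, 0.0
    shares = []
    for _ in range(years):
        new_content = (human + synthetic) * annual_growth
        synthetic += new_content * synthetic_fraction_of_new
        human += new_content * (1.0 - synthetic_fraction_of_new)
        shares.append(synthetic / (human + synthetic))
    return shares


for year, share in enumerate(synthetic_share_over_time(10), start=1):
    print(f"year {year:>2}: {share:.1%} of cumulative corpus is synthetic")
```

In this model the cumulative share rises every year and approaches, but never exceeds, the synthetic fraction of new content, so the pace of pollution depends heavily on how fast the total corpus grows.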

Long-Tail Knowledge Gaps

Another significant limitation is in specialized domains that represent the “long tail” of human knowledge. Areas like advanced scientific research, specialized engineering, niche business domains, and highly technical fields often lack the abundant, well-explained documentation needed for models to learn effectively.

This creates a situation where models can provide broad, general knowledge but struggle with deep expertise in specialized domains, particularly those that evolve rapidly or have limited public documentation.

The Rise of Reasoning Models: A New Paradigm

In response to these challenges, the industry has begun shifting toward a fundamentally different approach: reasoning models.

The Thinking Model Revolution

The last six months have seen major releases focused on explicit reasoning capabilities:

- OpenAI’s o1/o3: Models that generate a chain of thought before answering

- DeepSeek R1: Open-weight model focused on step-by-step reasoning

- Claude 3.7 Sonnet: First hybrid model with switchable “thinking mode”

- Gemini 2.5: Google’s entry into the reasoning model space

These models represent a fundamentally different approach to intelligence. Rather than simply matching patterns learned during training, they explicitly work through problems step by step before providing an answer.

Agentic Uses and New Product Categories

This shift toward reasoning models has enabled new categories of AI products:

- Research assistants: Tools like Claude Reasoning, Perplexity, and Sourcely that can analyze complex documents

- Coding agents: Products like GitHub Copilot, Cursor, and DeepSeek Coder that can understand and modify large codebases

- LLM-powered notebooks: Interactive environments like Gemini Canvas that combine text, code, and visualization

We’re also seeing reasoning capabilities integrated directly into operating systems and productivity software, with Microsoft’s Copilot and Google Workspace leading the way.

Leena AI’s Experience with Reasoning Models

At Leena AI, we’ve explored using reasoning models in our Agentic RAG system, which requires multiple steps of reasoning before providing answers. Our findings have been instructive for understanding the current state of these models.
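For readers unfamiliar with the pattern, the toy sketch below shows what "multiple steps of reasoning" can look like in a retrieval loop. It is not our production system: the documents, the `plan` and `retrieve` helpers, and the word-overlap scoring are all hypothetical stand-ins, and in a real system a reasoning model would do the planning and a search index would do the retrieval.

```python
from typing import Optional

# Hypothetical in-memory knowledge base; a real system would query a search index.
DOCS = {
    "leave-policy": "Employees accrue 1.5 days of paid leave per month.",
    "carryover": "Unused paid leave carries over up to 10 days per year.",
    "expenses": "Expense reports are due within 30 days of purchase.",
}


def plan(question: str) -> list[str]:
    """Stand-in for a reasoning model: split a compound question into sub-queries."""
    return [part.strip() for part in question.rstrip("?").split(" and ") if part.strip()]


def retrieve(query: str) -> Optional[str]:
    """Return the id of the document with the highest word overlap, or None if nothing matches."""
    words = set(query.lower().split())
    best_id, best_score = None, 0
    for doc_id, text in DOCS.items():
        score = len(words & set(text.lower().rstrip(".").split()))
        if score > best_score:
            best_id, best_score = doc_id, score
    return best_id


def gather_evidence(question: str, max_steps: int = 4) -> list[str]:
    """Run one retrieval per planned sub-query, up to max_steps, collecting distinct documents."""
    evidence: list[str] = []
    for sub_query in plan(question)[:max_steps]:
        doc_id = retrieve(sub_query)
        if doc_id is not None and doc_id not in evidence:
            evidence.append(doc_id)
    return evidence


question = "how much paid leave do employees accrue and when are expense reports due?"
print("single-step retrieval:", retrieve(question))
print("multi-step retrieval: ", gather_evidence(question))
```

A single retrieval over the whole question surfaces only one document, while the planned loop collects evidence for both parts; the answer-generation step that would consume this evidence is deliberately left out.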

Where Is the Industry Headed?

Based on our analysis, we see several clear trends emerging in the LLM landscape:

1. Enterprises as the New Data Frontier

With public internet data largely exhausted, the next frontier for training data will be private enterprise data. We’re already seeing this trend materialize through partnerships:

- Microsoft’s strategic investments in OpenAI and access to enterprise platforms

- Google’s integration of Gemini across its workspace suite

- Anthropic’s partnerships with major enterprise customers

These arrangements provide access to high-quality, specialized data that isn’t publicly available, potentially enabling the next wave of model improvements.

2. Distribution and Integration as the New Battleground

As the technology matures, competitive advantage is shifting from raw model capabilities to distribution, integration, and user experience:

- Operating system integration: Microsoft Windows Copilot, Apple Intelligence

- Enterprise tool integration: Salesforce, ServiceNow, Workday, etc.

- Vertical-specific solutions: Specialized models for healthcare, legal, and finance

The companies that can seamlessly integrate LLMs into existing workflows will likely capture more value than those focused solely on incrementally improving general-purpose models.

3. New Revenue and Partnership Models

The ecosystem is evolving beyond the basic API pricing model toward more sophisticated commercial arrangements:

- Data-for-access partnerships: Enterprises providing data in exchange for custom models

- Vertical specialization: Industry-specific models with specialized knowledge

- Hybrid deployment models: Combining cloud APIs with on-premise or edge deployments

Conclusion: The End of One Era, The Beginning of Another

The apparent plateau in non-reasoning LLMs doesn’t signal the end of progress in AI – rather, it marks a transition to a new phase of development. The easy gains from scaling existing architectures on public data have largely been realized, pushing the industry toward new approaches.

We expect the next wave of breakthroughs to come from:

- Novel architectures that fundamentally improve reasoning capabilities

- Access to new sources of high-quality, specialized data

- Integration of multiple modalities and knowledge sources

- Hybrid approaches that combine the best of pattern-matching and explicit reasoning

At Leena AI, we’re excited about these developments and are working to incorporate the best of both reasoning and non-reasoning approaches into our products. We believe the future lies not in choosing one paradigm over the other, but in intelligently combining them to address the specific needs of each use case.

We’d love to hear your thoughts on these trends. Have you noticed the plateau in non-reasoning models? Are you exploring reasoning models in your work? Share your experiences in the comments below.

Note: This analysis represents our perspective based on publicly available information and our own experiences. The AI landscape is evolving rapidly, and new developments may shift these trends in unexpected ways.