We live in a world where language is at the heart of communication and understanding. From everyday conversations to complex business interactions, the power of language should not be underestimated. Add to that deep learning models that try to understand and generate human language, and you have a new source of language altogether. With large language models (LLMs), the boundaries of language processing and generation have been pushed even further.

What are Large Language Models?

Large language models (LLMs) are a revolutionary breakthrough in the field of natural language processing and artificial intelligence. These models are designed to understand, generate, and manipulate human language with an unprecedented level of sophistication. At their core, LLMs are complex neural networks that have been trained on vast amounts of textual data. By leveraging deep learning techniques, these models can capture the intricate patterns and structures inherent in language. LLMs are capable of learning grammar, semantics, and even nuances of expression, allowing them to generate text that closely resembles human-authored content.

The development of LLMs has been a result of continuous advancements in language models over the years. From the early rule-based systems to statistical models and now deep learning approaches, the journey of language models has been marked by significant milestones. The evolution of large language models has been fueled by the availability of massive amounts of text data and computational resources. With each iteration, models have become larger, more powerful, and capable of understanding and generating language with increasing accuracy and complexity. This progress has opened up new possibilities for applications in various domains, from natural language understanding to machine translation and text generation.

Understanding the capabilities of LLMs

To truly appreciate the capabilities of LLMs, it is essential to delve into their wide range of applications. LLMs can be used for tasks such as language translation, text summarization, sentiment analysis, and even creative writing to a limited extent. They have the potential to transform the way we interact with technology, enabling more natural and human-like interactions.

One of the most remarkable features of LLMs is their ability to generate coherent and contextually relevant text. By feeding them a prompt or a partial sentence, LLMs can complete the text in a way that aligns with the given context and adheres to the rules of grammar and style. This opens up exciting possibilities for content creation, automated customer support, and personalized employee experiences.

How Do Large Language Models Work?

The architecture of LLMs

To grasp how Large Language Models (LLMs) operate, it’s important to understand their underlying architecture. LLMs typically follow a transformer-based architecture, which has proven to be highly effective in natural language processing tasks.

At the heart of this architecture are self-attention mechanisms that allow the model to capture the relationships between different words or tokens within a given text. This attention mechanism enables LLMs to focus on the relevant parts of the input sequence, making them adept at understanding the context and dependencies between words.
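The attention step described above can be sketched in a few lines. This is a minimal illustration of scaled dot-product self-attention, not a full transformer: the three toy token vectors are made-up numbers, and the same vectors are reused as queries, keys, and values, whereas a real model would first pass them through learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over one sequence.

    Each argument is a list of vectors, one per token. The result holds one
    output vector per token: a weighted average of all value vectors.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 2-dimensional vectors for three tokens (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, which is the sense in which the model "focuses" on relevant parts of the input.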

The transformer architecture also consists of multiple layers of self-attention and feed-forward neural networks, which collectively enable the model to process and generate text with remarkable fluency and coherence. By stacking these layers, LLMs can capture complex linguistic patterns and generate high-quality output.

Pre-training and fine-tuning processes

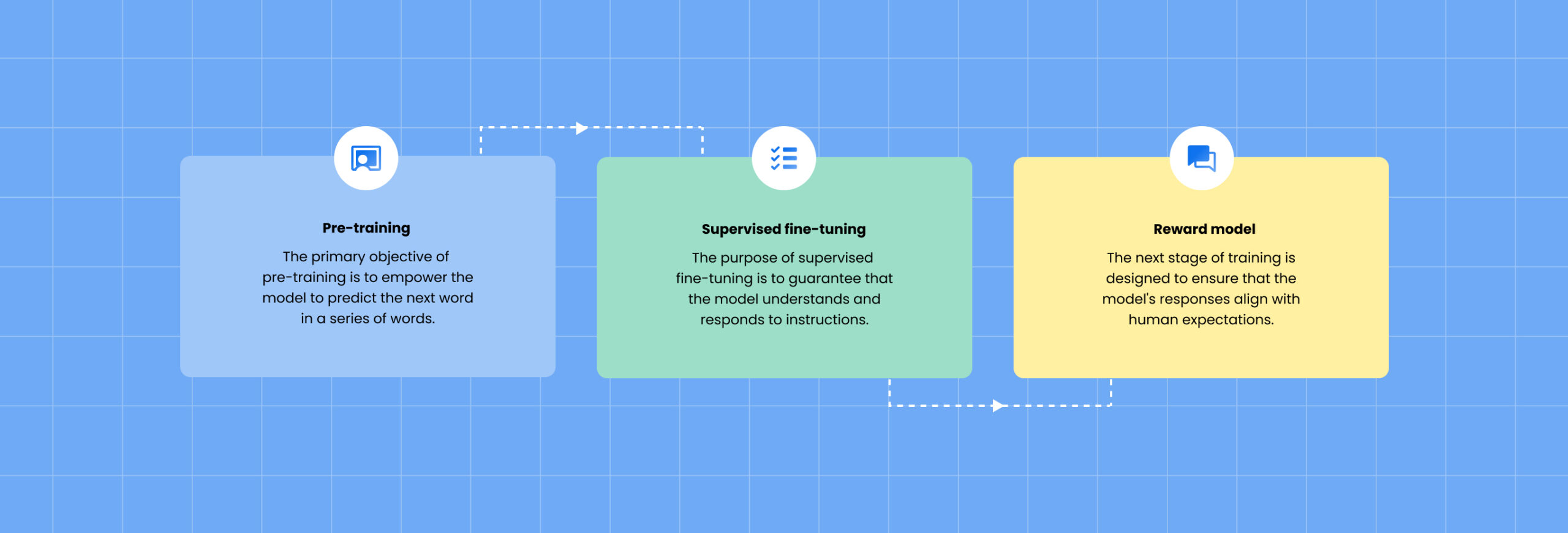

The training process of LLMs involves two key stages: pre-training and fine-tuning. Pre-training is a self-supervised process (often described as unsupervised) in which the model learns from vast amounts of unlabeled text to acquire a general understanding of language. During this phase, the model predicts tokens that have been hidden from it: GPT-style models predict the next token in a sequence, while BERT-style models fill in masked tokens. In doing so, it learns the underlying patterns of the language.
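To make the prediction task concrete, here is a small sketch of how training examples can be derived from raw text without any human labels. It shows two common setups: predicting the next token from the preceding ones, and hiding some tokens and predicting them from their surroundings. The function names, the `[MASK]` placeholder, and the masking rate are assumptions chosen for illustration, and whitespace splitting stands in for a real tokenizer.

```python
import random

def next_token_examples(tokens):
    """Causal objective: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_token_example(tokens, mask_rate=0.15, seed=0, mask="[MASK]"):
    """Masked objective: hide some tokens and keep them as prediction targets."""
    rng = random.Random(seed)
    corrupted, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_rate:
            corrupted.append(mask)
            targets[i] = tok  # position -> original token to recover
        else:
            corrupted.append(tok)
    return corrupted, targets

tokens = "the cat sat on the mat".split()
pairs = next_token_examples(tokens)
corrupted, targets = masked_token_example(tokens, mask_rate=0.3, seed=1)
print(pairs[0])
print(corrupted, targets)
```

In both cases the "label" comes from the text itself, which is why pre-training can use very large corpora without manual annotation.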

Once pre-training is complete, the model enters the fine-tuning stage. Fine-tuning is a supervised process where the LLM is trained on a specific task or dataset. By exposing the model to task-specific data, such as question-answering or text completion examples, it learns to adapt its knowledge and generate output that aligns with the desired task.

This two-step process of pre-training and fine-tuning is a crucial aspect of LLMs, as it enables them to leverage the knowledge acquired from a broad range of textual data and specialize in specific tasks or domains.

Transfer learning in LLMs

LLMs excel at transfer learning, which is the ability to apply knowledge gained from one task to another related task. Transfer learning allows LLMs to generalize their understanding of language and leverage it across different applications.

By pre-training on a large corpus of text data, LLMs develop a rich representation of language that can be fine-tuned for specific tasks with relatively small amounts of task-specific data. This transfer learning capability makes LLMs highly efficient, as they can leverage their pre-existing knowledge and adapt it to new scenarios or domains.
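The idea of reusing pre-trained knowledge with little task data can be sketched as a frozen encoder plus a small trainable head. Everything here is a toy under stated assumptions: `frozen_encoder` is a hand-written stand-in for a real pre-trained model, the word lists and the four training sentences are invented, and only the logistic-regression head is updated during training.

```python
import math

# Hypothetical stand-in for a frozen pre-trained encoder. A real LLM would
# supply learned embeddings; here two hand-picked word counts play that role.
POSITIVE = {"great", "love", "helpful"}
NEGATIVE = {"slow", "broken", "confusing"}

def frozen_encoder(text):
    words = text.lower().split()
    return [sum(w in POSITIVE for w in words), sum(w in NEGATIVE for w in words)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples, epochs=200, lr=0.5):
    """Fit a logistic-regression head on top of the frozen features."""
    dim = len(frozen_encoder(examples[0][0]))
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        for text, label in examples:
            feats = frozen_encoder(text)  # the encoder itself is never updated
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            error = pred - label
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias

def predict(text, weights, bias):
    feats = frozen_encoder(text)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)

# A handful of labeled examples is enough to train the small head.
train = [
    ("great and helpful tool", 1),
    ("love it", 1),
    ("slow and broken", 0),
    ("confusing menus", 0),
]
weights, bias = train_head(train)
print(round(predict("helpful and great", weights, bias), 2))
print(round(predict("broken and slow", weights, bias), 2))
```

The design point is the split of responsibilities: the expensive general-purpose representation is learned once, and each new task only needs a small, cheap component on top.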

Commonly used LLM frameworks

Various LLM frameworks and model families have been developed to facilitate the implementation and utilization of these powerful language models. Two commonly used examples are:

- OpenAI’s GPT (Generative Pre-trained Transformer): GPT models, such as GPT-3, have gained significant attention for their ability to generate coherent and contextually relevant text across a wide range of applications.

- BERT (Bidirectional Encoder Representations from Transformers): BERT is a popular model family known for its strong performance in natural language understanding tasks. It has been widely used for tasks like sentiment analysis, named entity recognition, and text classification.

These frameworks provide a foundation for building and deploying LLMs, offering developers and researchers a starting point to leverage the power of these models in their own applications.

Applications of Large Language Models

Natural Language Processing (NLP) tasks

Large Language Models (LLMs) have revolutionized the field of Natural Language Processing (NLP) by offering remarkable capabilities in various NLP tasks. These models can tackle tasks such as machine translation, named entity recognition, text classification, and part-of-speech tagging with exceptional accuracy and efficiency.

By leveraging the contextual understanding and linguistic patterns captured during pre-training, LLMs can process and analyze text data in a manner that closely resembles human language comprehension. This makes them invaluable in extracting meaningful insights from unstructured text, enabling businesses to derive valuable information from vast amounts of textual data.

Text generation and completion

One of the most captivating abilities of LLMs is their capacity to generate human-like text and complete partial sentences. With the power to comprehend and learn from extensive textual data, LLMs can generate coherent and contextually relevant text in a wide range of applications.

Text completion tasks, where LLMs are given a partial sentence or prompt and are expected to generate the rest of the text, have become particularly intriguing. These models can exhibit creativity and generate text that flows naturally, making them valuable tools for content generation, as well as assisting writers and content creators in their work.
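The completion loop itself is simple to sketch: given the text so far, pick a likely next word, append it, and repeat. The example below uses a tiny bigram counter in place of a neural network, so it is only an illustration of the generate-one-token-at-a-time pattern, not of how an LLM scores candidates. The corpus and function names are invented for the example.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def complete(prompt, follows, max_new_words=5):
    """Greedy completion: repeatedly append the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # nothing was seen after this word, so stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "a model predicts the next word",
    "a model predicts the next token",
    "the next word extends a prompt",
]
follows = train_bigrams(corpus)
print(complete("a model", follows, max_new_words=4))
# prints: a model predicts the next word
```

An LLM replaces the bigram table with a network that conditions on the whole preceding context, and usually samples from the predicted distribution rather than always taking the top choice.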

Sentiment analysis and language understanding

Understanding the sentiment and nuances of human language is another area where LLMs shine. Sentiment analysis, a popular NLP task, involves determining the sentiment or emotion expressed in a piece of text, such as positive, negative, or neutral. LLMs can analyze the context, tone, and word usage to accurately classify the sentiment of text, providing valuable insights for businesses and researchers.

Moreover, LLMs’ deep understanding of language allows them to grasp the intricacies of human communication, including sarcasm, irony, and other linguistic nuances. This capability enhances their language understanding, making them invaluable in applications such as customer feedback analysis, social media monitoring, employee engagement, and market research.

LLMs in conversational AI and chatbots

Conversational AI and chatbot technologies have greatly benefited from the capabilities of LLMs. These models can power intelligent and natural-sounding conversations, providing engaging and interactive experiences for users.

LLMs can understand user queries, generate appropriate responses, and maintain coherent conversations by leveraging their vast knowledge and contextual understanding. They can be deployed in employee and customer support systems, virtual assistants, and chatbot platforms, enabling businesses to deliver personalized and efficient interactions.

By simulating human-like conversations, chatbots powered by LLMs can enhance HR processes, improve customer and employee experiences, streamline communication, and even automate certain tasks, saving time and resources for businesses.

Benefits and Challenges of Large Language Models

Advantages of using LLMs

Large Language Models (LLMs) offer a plethora of advantages that have made them a game-changer in various industries. Let’s explore some of the key benefits of using LLMs:

Enhanced efficiency:

LLMs excel at processing and analyzing vast amounts of data in a fraction of the time it would take a human. This enables organizations to streamline their operations, automate tedious tasks, and derive insights from massive datasets more quickly. Imagine the power of having a virtual assistant that can analyze documents, answer questions, and provide valuable information with remarkable speed and accuracy.

Improved accuracy:

LLMs leverage their pre-trained knowledge and contextual understanding to deliver highly accurate results in tasks such as language translation, sentiment analysis, and information retrieval. Their ability to learn from a wide range of data sources helps them overcome limitations faced by rule-based systems. This means more reliable language understanding, better content recommendations, and more precise text generation.

Versatile applications:

LLMs have found applications in diverse areas, ranging from legal and finance to marketing and sales. They can adapt to different domains and industries, making them flexible and valuable across various sectors. Whether it’s medical diagnosis, financial forecasting, or generating compelling marketing copy, LLMs have the potential to revolutionize how we approach various tasks.

Creative problem-solving:

LLMs possess a remarkable ability to generate creative and contextually appropriate text. This opens up new possibilities for content creation, brainstorming ideas, and even assisting in the generation of innovative solutions to complex problems. Imagine an AI collaborator that can help you come up with fresh ideas or assist in crafting engaging narratives. The creative potential of LLMs is truly fascinating.

Challenges associated with Large Language Models

Ethical considerations and potential biases:

While LLMs offer remarkable capabilities, it is important to address the ethical considerations associated with their use. One concern is the potential biases embedded in the training data used to develop these models. If the training data contains biases or reflects societal prejudices, the LLMs may inadvertently perpetuate those biases in their outputs. This can lead to unintended discrimination or unfairness in the information provided by LLMs.

It is crucial for developers and organizations to carefully curate and diversify training datasets to mitigate biases. Additionally, ongoing research and efforts are being made to develop techniques that can detect and mitigate biases in LLMs, ensuring fair and unbiased outcomes. Responsible AI practices demand continuous scrutiny and improvement to minimize bias and promote fairness in the use of LLMs.

Addressing the environmental impact of LLMs:

The impressive computational power required to train and fine-tune LLMs comes with an environmental cost. The energy consumption and carbon footprint associated with training large models have raised concerns about their environmental impact. As we explore the remarkable capabilities of LLMs, it is essential to consider their sustainability.

Researchers and organizations are actively exploring ways to make the training process more energy-efficient and environmentally sustainable. Techniques such as model compression and knowledge distillation aim to reduce the computational resources required while maintaining the model’s performance. Furthermore, efforts are being made to develop energy-efficient hardware specifically designed for training and deploying LLMs. By prioritizing sustainability, we can harness the power of LLMs while minimizing their ecological footprint.
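Knowledge distillation, mentioned above, trains a small "student" model to match the output distribution of a large "teacher". A minimal sketch of the core loss follows: both sets of logits are softened with a temperature and compared with KL divergence. The logits and the temperature of 2.0 are illustrative values, and this omits pieces commonly added in practice, such as scaling by the squared temperature and mixing in the ordinary label loss.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives a flatter distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

teacher = [4.0, 1.0, 0.0]
near_student = [3.5, 1.2, 0.1]  # roughly agrees with the teacher
far_student = [0.0, 1.0, 4.0]   # prefers a different class

print(round(distillation_loss(teacher, near_student), 4))
print(round(distillation_loss(teacher, far_student), 4))
```

Minimizing this loss pushes the student toward the teacher's behavior, which is how a smaller and cheaper model can retain much of the larger model's performance.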

Security and privacy concerns with LLMs:

The vast amount of information processed and stored by LLMs raises security and privacy concerns. Fine-tuned LLMs have the potential to memorize and reproduce sensitive or confidential information encountered during training, posing risks to data privacy. Moreover, the generation capabilities of LLMs can inadvertently lead to the creation of misleading or harmful content.

To mitigate these concerns, organizations should protect sensitive data with rigorous anonymization, encryption, and access control measures. Robust data governance practices, including data minimization, help safeguard user information while leveraging the power of LLMs.
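As one small example of anonymization, text can be scrubbed of obvious identifiers before it is stored for training or sent to a model. The sketch below redacts email addresses and phone-like numbers with regular expressions. The patterns are deliberately simple assumptions and will miss many real-world formats, so production systems typically rely on dedicated PII detection tooling.

```python
import re

# Simplified patterns for illustration; they do not cover every valid format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text

message = "Contact jane.doe@example.com or +1 (555) 010-9999 about ticket 42."
print(redact(message))
# prints: Contact [EMAIL] or [PHONE] about ticket 42.
```

Running redaction before data leaves your own systems also supports data minimization, since the model never receives the identifiers in the first place.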

Additionally, efforts are being made to develop advanced techniques that can detect and filter out potentially harmful or misleading content generated by LLMs. Natural Language Processing (NLP) models are being employed to identify and flag inappropriate or biased outputs, helping organizations maintain content integrity and prevent the spread of misinformation.

Furthermore, user consent and transparency play a crucial role in addressing security and privacy concerns. Organizations should be transparent about the use of LLMs, clearly communicate their data handling practices, and provide users with control over their personal information. Implementing stringent security measures, conducting regular audits, and adhering to industry best practices can help ensure the responsible and secure use of LLMs.

By acknowledging and addressing these ethical, environmental, security, and privacy challenges, we can harness the benefits of LLMs while upholding responsible AI practices. Striking a balance between innovation and accountability is vital in ensuring that LLMs contribute positively to society and empower us to navigate the complex world of language understanding and generation.

How to Get Started?

Implementing Large Language Models in Business

Identifying relevant use cases

When considering the implementation of Large Language Models (LLMs) in business, it is important to identify the specific use cases that can benefit from the capabilities of LLMs. This involves understanding the challenges and opportunities within your organization that can be addressed through language understanding and generation. Some potential use cases include employee or customer support automation, content generation, market research, sentiment analysis, and data analysis.

Data preparation and model training

Implementing LLMs requires a robust data preparation process. It involves collecting and curating relevant data sets that are representative of the specific task or domain. Preparing the data involves cleaning, preprocessing, and structuring it to ensure its compatibility with LLMs. Once the data is prepared, the model training process begins, which involves feeding the data into the LLM and fine-tuning it on the specific task or domain. This step is crucial for optimizing the model’s performance and aligning it with the desired business outcomes.

Infrastructure and computational resources

LLMs are computationally intensive and require substantial infrastructure and computational resources to run efficiently. Implementing LLMs in business often involves setting up high-performance computing environments, leveraging cloud services, or utilizing specialized hardware, such as Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs). It is essential to ensure that the infrastructure can support the computational demands of LLMs to achieve optimal performance and minimize latency.
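A quick back-of-the-envelope calculation helps when sizing hardware: the memory needed just to hold a model's weights is the parameter count multiplied by the bytes used per parameter. The sketch below assumes a hypothetical 7-billion-parameter model and three common numeric precisions. It covers weights only, so activations, caches, and (for training) optimizer state would come on top.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(num_params, precision="fp16"):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# Hypothetical model size used purely for illustration.
for precision in BYTES_PER_PARAM:
    print(precision, weight_memory_gb(7e9, precision), "GB")
```

Comparing the result against the memory of a single GPU gives a first indication of whether a model fits on one device or needs to be split or quantized.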

Integration with existing systems and workflows

Integrating LLMs into existing systems and workflows is a critical step in implementing LLMs in business processes. This involves developing application programming interfaces (APIs) or connectors that enable seamless interaction between LLMs and other systems or applications within the organization. Integrating LLMs with existing workflows ensures smooth collaboration between human users and LLMs, enhancing productivity and efficiency in various business functions.
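One way to keep such an integration manageable is to hide the model behind a small interface, so the rest of the system does not depend on any particular vendor or model. The sketch below is a hypothetical design: `TextGenerator`, `EchoBackend`, and `SupportAssistant` are invented names, and the backend is a stub that returns a canned reply rather than calling a real model.

```python
from typing import Protocol

class TextGenerator(Protocol):
    """Anything that can turn a prompt into text (hypothetical interface)."""
    def generate(self, prompt: str) -> str: ...

class EchoBackend:
    """Placeholder backend for local testing; a real one would call a model."""
    def generate(self, prompt: str) -> str:
        return f"(stub reply to: {prompt})"

class SupportAssistant:
    """Thin connector between an existing workflow and a text generator."""
    def __init__(self, backend: TextGenerator, max_prompt_chars: int = 2000):
        self.backend = backend
        self.max_prompt_chars = max_prompt_chars

    def answer(self, question: str) -> dict:
        question = question.strip()
        if not question:
            raise ValueError("question must not be empty")
        # Truncate overly long input before handing it to the backend.
        prompt = question[: self.max_prompt_chars]
        return {"question": question, "answer": self.backend.generate(prompt)}

assistant = SupportAssistant(EchoBackend())
print(assistant.answer("  How do I reset my password?  "))
```

Because the assistant only depends on the `generate` method, the stub can later be swapped for a self-hosted or purchased model without changing the calling code.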

Continuous monitoring and improvement

Implementing LLMs is an iterative process that requires continuous monitoring and improvement. Regularly evaluating the performance of LLMs, analyzing user feedback, and incorporating updates and enhancements are essential for ensuring their effectiveness and accuracy. Monitoring and fine-tuning LLMs enable organizations to adapt to evolving business needs, improve user experiences, and address any emerging challenges or biases that may arise.

Ethical considerations and responsible AI practices

Incorporating ethical considerations and responsible AI practices should be a fundamental aspect of implementing LLMs in business. This involves ensuring the transparency of AI systems, addressing potential biases, and adhering to privacy and security regulations. Organizations should establish clear guidelines and policies for the responsible use of LLMs, including data governance, user consent, and content moderation. By integrating responsible AI practices into the implementation process, businesses can maximize the benefits of LLMs while upholding ethical standards and maintaining public trust.

Build vs Buy: Choosing the Right Approach for Large Language Models

When considering the adoption of Large Language Models (LLMs), organizations often face the decision of whether to build their own LLM or leverage existing pre-trained models available in the market. This choice requires careful consideration of various factors. Let’s explore the key aspects to help you make an informed decision:

Building an LLM

Building your own LLM involves developing a language model from scratch or adapting an existing model to suit your specific needs. This approach offers greater customization and control over the model’s architecture, training data, and fine-tuning process. It allows you to address domain-specific requirements and tailor the LLM to your organization’s unique challenges.

However, building an LLM requires significant expertise in natural language processing (NLP), machine learning, and computational resources. It demands a dedicated team of data scientists, engineers, and researchers who can develop, train, and optimize the model. Building an LLM can be time-consuming and resource-intensive, requiring substantial investments in infrastructure, data, and talent.

Buying a Pre-trained LLM

Alternatively, organizations can opt to buy pre-trained LLMs available in the market. These models are already trained on vast amounts of data and offer a wide range of language understanding and generation capabilities. Buying a pre-trained LLM provides a faster route to leverage advanced language processing capabilities without investing extensive time and resources in training your own model.

By purchasing a pre-trained LLM, you benefit from the research and development efforts of experts in the field. These models often come with well-documented APIs and integration options, making it easier to incorporate them into your existing systems and applications. Additionally, pre-trained LLMs undergo rigorous testing and fine-tuning, which can result in improved performance and reliability.

However, it’s essential to consider potential limitations and constraints when buying a pre-trained LLM. The model might not be specifically optimized for your unique use cases or domain-specific requirements. You might have limited control over the training data and the fine-tuning process. Additionally, licensing agreements and costs associated with using pre-trained LLMs should be carefully evaluated.

Striking the Right Balance

When deciding between building or buying an LLM, it’s crucial to strike the right balance based on your organization’s needs, resources, and expertise. Consider the following factors:

- Time to Market: Building an LLM from scratch can be time-consuming, delaying the deployment of language processing capabilities in your organization. Buying a pre-trained LLM can provide faster time to market and quicker access to advanced language capabilities.

- Customization Needs: Assess the level of customization required for your specific use cases. If your organization demands highly tailored language models, building your own LLM might be the better choice. However, if the existing pre-trained models can meet your requirements with minor adjustments, buying can be a viable option.

- Resources and Expertise: Evaluate your organization’s resources, including data, computational power, and expertise in NLP and machine learning. Building an LLM requires substantial technical expertise and resources, while buying a pre-trained model can leverage the expertise of the provider.

- Cost Considerations: Building an LLM involves significant investments in terms of infrastructure, data acquisition, talent, and ongoing maintenance. Buying a pre-trained LLM comes with licensing costs and potentially additional fees based on usage. Evaluate the long-term costs and benefits associated with each approach.
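The cost comparison in the last point can be turned into simple arithmetic: add up the upfront and recurring costs of each option and find the month at which building stops being more expensive than buying. All figures below are hypothetical placeholders, and the model ignores factors such as usage growth, discounting, and staffing changes.

```python
def cumulative_cost(upfront, monthly, months):
    """Total spend after a given number of months."""
    return upfront + monthly * months

def break_even_month(build_upfront, build_monthly, buy_upfront, buy_monthly,
                     horizon=120):
    """First month at which building costs no more than buying, or None."""
    for month in range(1, horizon + 1):
        build = cumulative_cost(build_upfront, build_monthly, month)
        buy = cumulative_cost(buy_upfront, buy_monthly, month)
        if build <= buy:
            return month
    return None

# Hypothetical figures: building has a high upfront cost but lower running
# costs; buying has no upfront cost but higher monthly fees.
month = break_even_month(
    build_upfront=500_000, build_monthly=20_000,
    buy_upfront=0, buy_monthly=45_000,
)
print(month)
# prints: 20
```

If no break-even point appears within the planning horizon, the function returns `None`, which suggests that buying remains cheaper over that period under the stated assumptions.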

By carefully weighing these factors, you can make an informed decision on whether to build or buy an LLM that aligns with your organization’s goals, capabilities, and budget.

Remember that both approaches have their own advantages and considerations. Some organizations might find it beneficial to pursue a hybrid approach, where they build upon a pre-trained model and fine-tune it to their specific requirements. This allows for a balance between customization and leveraging existing expertise.

Ultimately, the decision between building and buying an LLM should align with your organization’s long-term strategy, timeline, resource availability, and specific use cases. Consider engaging with domain experts and consulting with NLP specialists to gain a deeper understanding of the trade-offs and potential outcomes associated with each approach.

It’s also important to stay updated on the latest advancements in the field of LLMs, as new pre-trained models and tools continue to emerge. Regularly reassess your needs and the evolving landscape to ensure you’re making the most informed decisions for your organization.

By carefully evaluating the build vs buy options and considering your organization’s unique requirements, you can make a confident choice that sets you on the path to effectively harnessing the power of Large Language Models.

Building versus buying a new product or piece of software for an organization is a difficult decision. Therefore, it is essential to follow a structured approach when deciding whether to build versus buy.

We also recommend exploring our comprehensive e-book, The Ultimate HRSD Build vs Buy Framework Decision Guide.

Best Practices for Adopting Large Language Models

Define clear objectives and use cases

Before adopting Large Language Models (LLMs), it is crucial to define clear objectives and identify specific use cases where LLMs can provide value. Clearly articulate the problems you aim to solve or the opportunities you seek to leverage through LLM implementation. This clarity will guide your decision-making process and ensure that LLMs are aligned with your organization’s strategic goals.

Start with smaller pilot projects

Implementing LLMs can be complex and resource-intensive. To mitigate risks and gain valuable insights, consider starting with smaller pilot projects. These pilot projects allow you to assess the feasibility and effectiveness of LLMs in a controlled environment. By starting small, you can fine-tune your approach, address any challenges, and validate the value proposition of LLMs before scaling up.

Invest in high-quality data

Data quality is paramount when working with LLMs. Invest time and effort in acquiring and curating high-quality training data that is representative of your specific use case. Ensure that the data is relevant, diverse, and properly labeled or annotated. High-quality data sets will enhance the performance and accuracy of your LLMs, leading to more reliable outcomes.

Collaborate between domain experts and data scientists

Successful adoption of LLMs requires collaboration between domain experts and data scientists. Domain experts possess deep knowledge of the industry, business processes, and customer needs. Data scientists bring expertise in machine learning and LLM technologies. By fostering collaboration and knowledge exchange, you can ensure that LLMs are tailored to the specific domain requirements and effectively address real-world challenges.

Establish robust evaluation metrics

Define clear evaluation metrics to assess the performance and impact of LLMs. Establish quantitative and qualitative measures that align with your objectives and use cases. Metrics can include accuracy, efficiency, customer satisfaction, or business outcomes such as revenue or cost savings. Regularly monitor these metrics and make adjustments as needed to optimize LLM performance and demonstrate return on investment.
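A quantitative metric can be as simple as accuracy on a fixed, labeled evaluation set, tracked over time against a baseline. The sketch below computes accuracy and flags a model version for review when it falls too far below the baseline. The labels, baseline, and tolerance are illustrative values, not recommendations.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that exactly match the reference labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("evaluation set must not be empty")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

def needs_review(current, baseline, tolerance=0.05):
    """Flag a version whose accuracy drops more than `tolerance` below baseline."""
    return current < baseline - tolerance

# Illustrative evaluation data for a sentiment task.
labels = ["pos", "neg", "neg", "neu"]
predictions = ["pos", "neg", "pos", "neu"]

score = accuracy(predictions, labels)
print(score)
# prints: 0.75
print(needs_review(score, baseline=0.90))
# prints: True
```

Running the same check after every model or prompt change turns monitoring into a routine step rather than an occasional audit.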

Ensure explainability and interpretability

LLMs can sometimes be seen as black boxes due to their complexity. It is important to ensure explainability and interpretability of LLM outputs. Develop methods and tools to understand how LLMs arrive at their predictions or generate text. This transparency enables stakeholders to trust the decision-making process and facilitates regulatory compliance, especially in sensitive domains.

Foster a culture of continuous learning

LLMs are continuously evolving, and new research and advancements emerge regularly. Foster a culture of continuous learning within your organization to stay updated with the latest developments in LLM technology. Encourage knowledge sharing, attend conferences or webinars, and engage with the broader AI community. This ongoing learning will enable you to harness the full potential of LLMs and remain at the forefront of innovation.

Regularly assess and mitigate biases

Bias can inadvertently be present in LLMs due to the data they are trained on. Regularly assess LLM outputs for any biases that may arise, such as gender or racial bias. Implement mechanisms to identify and mitigate biases, including diversity in training data, bias detection tools, and rigorous testing. Striving for fairness and inclusivity in LLM outputs is essential for responsible and ethical AI adoption.
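One simple way to probe for bias is a counterfactual test: score the same sentence several times, changing only the term that refers to a group, and compare the results. The sketch below assumes a `score` function that maps text to a number (for example, a sentiment or suitability score). Both scorers shown are invented toys, one deliberately biased so the probe has something to detect.

```python
def counterfactual_gap(score, template, groups):
    """Score one template per group and report the largest difference."""
    scores = {group: score(template.format(group=group)) for group in groups}
    gap = max(scores.values()) - min(scores.values())
    return scores, gap

def biased_toy_score(text):
    """Hypothetical scorer that unfairly favors sentences starting with 'He'."""
    return 0.8 if text.startswith("He ") else 0.6

def neutral_toy_score(text):
    """Hypothetical scorer that ignores the group term entirely."""
    return 0.7

template = "{group} is a brilliant engineer."
scores, gap = counterfactual_gap(biased_toy_score, template, ["He", "She"])
print(scores, round(gap, 2))

_, neutral_gap = counterfactual_gap(neutral_toy_score, template, ["He", "She"])
print(neutral_gap)
```

A gap near zero across many templates is a necessary but not sufficient sign of fairness, so probes like this complement, rather than replace, broader audits.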

Ethical Considerations and Responsible AI

In the era of Large Language Models (LLMs), it is crucial to address the ethical considerations and ensure responsible AI practices. As LLMs become more prevalent and influential, we must prioritize fairness, transparency, accountability, and the overall impact on society. Here are some key areas to focus on:

Bias Mitigation and Fairness in LLMs

One of the significant concerns with LLMs is the potential for bias in their outputs. Bias can stem from the training data used, which may reflect societal biases and inequalities. It is essential to develop techniques to mitigate bias and promote fairness in LLMs. This includes diversifying training data, carefully curating datasets, and implementing bias detection and mitigation algorithms. Regular audits and evaluations should be conducted to identify and address any biases that may arise.

Guidelines for Ethical Use of LLMs

Developing and adhering to clear guidelines for the ethical use of LLMs is crucial. Organizations should establish policies and principles that outline the responsible deployment and application of LLMs. This includes respecting user privacy, protecting sensitive information, and ensuring compliance with legal and regulatory frameworks. Clear guidelines also help prevent the misuse of LLMs, such as generating malicious content or spreading misinformation.

Ensuring Accountability and Human Oversight

While LLMs demonstrate impressive capabilities, it is vital to maintain human oversight and accountability. Human reviewers and experts should be involved in the training, validation, and testing processes to ensure the accuracy and reliability of LLM outputs. Regular monitoring and auditing should be conducted to identify and rectify any errors or unintended consequences that may arise from LLM usage. Establishing robust mechanisms for accountability helps maintain trust in LLM technology and safeguards against potential harm.

Promoting AI Ethics in Organizations

Organizations adopting LLMs should prioritize promoting AI ethics within their internal processes and culture. This involves fostering a deep understanding of the ethical implications of LLM usage among employees and stakeholders. Regular training and education programs can help raise awareness of ethical considerations, responsible AI practices, and the potential impact of LLMs on individuals and society. Encouraging open dialogue and collaboration among diverse teams can further enhance ethical decision-making and ensure that LLMs are utilized in a manner that benefits everyone.

By actively addressing these ethical considerations and embracing responsible AI practices, organizations can mitigate risks, build trust, and create positive impacts with LLMs. Ethical and responsible use of LLMs not only ensures the integrity and fairness of AI systems but also contributes to the overall advancement and acceptance of AI technology in society.

Remember, as we harness the power of Large Language Models, we have a shared responsibility to use them in ways that align with ethical standards and serve the greater good. By prioritizing ethical considerations and responsible AI practices, we can unlock the full potential of LLMs while upholding the values and principles that guide us towards a better future.

Responsible AI practices and ethical considerations are critical whenever LLMs are employed for generative AI tasks. For CIOs who want to implement generative AI successfully, stay ahead of the competition, and revolutionize their business, we have created a detailed ebook: Generative AI for Business: The Ultimate CIO’s Guide

Future Trends in Large Language Models

As we delve into the world of Large Language Models (LLMs), it’s essential to keep an eye on the horizon and anticipate the future trends that will shape this rapidly evolving field. Here are some key areas to watch out for:

Model Size and Complexity:

LLMs have already grown in size and complexity over the years, enabling them to generate more coherent and contextually accurate responses. However, we can expect even larger models with enhanced capabilities to emerge in the future. These advancements will empower LLMs to handle more nuanced language tasks and exhibit a deeper understanding of context.

Multilingual and Cross-Lingual Capabilities:

LLMs have shown remarkable potential in understanding and generating text in multiple languages. Future advancements are likely to focus on improving multilingual capabilities, allowing LLMs to seamlessly process and generate content across various languages. This will facilitate global communication and collaboration, breaking down language barriers in diverse domains.

Improved Contextual Understanding:

While current LLMs demonstrate impressive contextual understanding, future models will aim to enhance their ability to reason, infer, and comprehend complex linguistic nuances. By incorporating broader knowledge bases and domain-specific information, LLMs will become more adept at providing accurate and nuanced responses, making them even more valuable across a wide range of applications.

Domain-Specific and Task-Specific LLMs:

As LLMs continue to evolve, we can expect specialized models designed for specific domains and tasks. These domain-specific LLMs will be fine-tuned on relevant data and will offer highly tailored solutions for industries such as healthcare, finance, legal, and customer support. Task-specific LLMs will be trained to excel in specific applications like summarization, translation, or code generation, further expanding the practical utility of LLMs.

Enhanced Interactivity and Adaptability:

Future LLMs will likely exhibit improved interactivity, enabling more dynamic conversations with users. They will learn and adapt based on user feedback, allowing for personalized and contextually relevant responses. This adaptability will let LLMs accommodate individual user preferences, enhancing the overall user experience and engagement.

Responsible AI and Ethical Considerations:

The responsible use of LLMs will continue to be a critical focus in the future. As LLMs become more powerful and influential, it is imperative to address ethical considerations such as bias, fairness, transparency, and privacy. Striving for responsible AI practices will be vital to ensure that LLMs are deployed in a manner that benefits society as a whole.

Embracing these future trends in Large Language Models will unlock new possibilities and revolutionize the way we interact with language technology. Staying informed, adapting to emerging techniques, and maintaining a strong ethical foundation will be crucial as we navigate the exciting future of LLMs.

By keeping a finger on the pulse of these trends, your organization can stay ahead of the curve and harness the full potential of Large Language Models to drive innovation, enhance productivity, and create meaningful connections in the digital age.

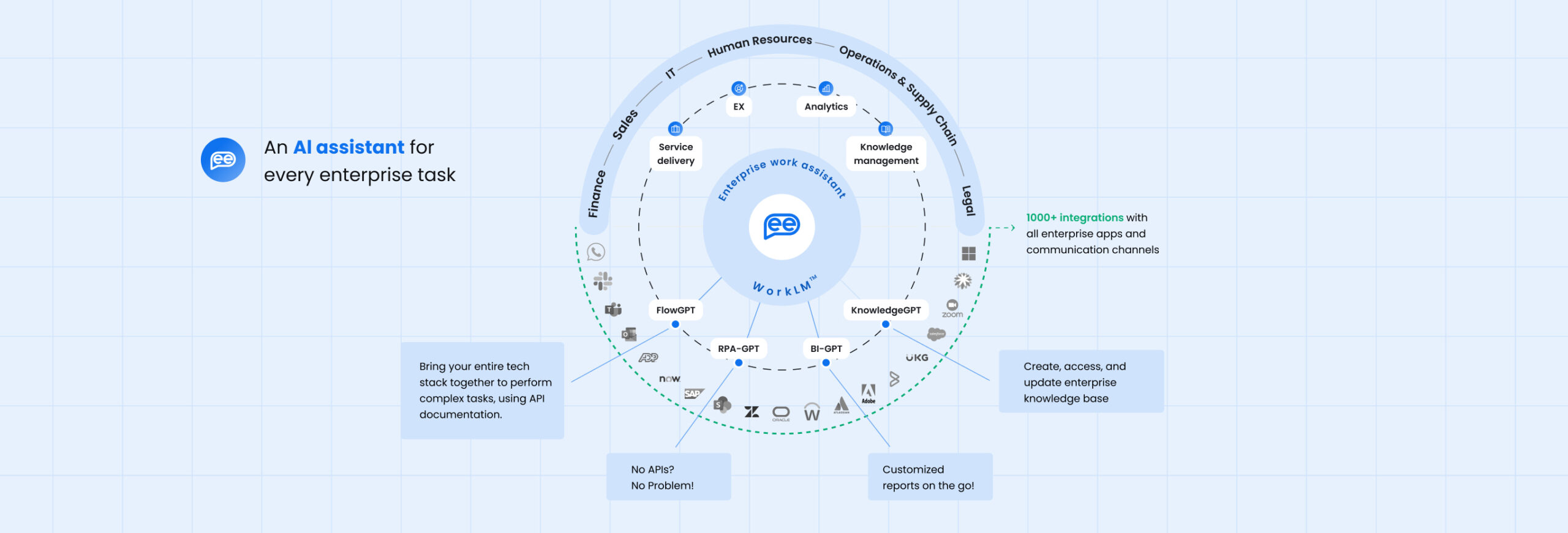

Leena AI WorkLM™: A Next-Generation Language Model to Get Work Done

WorkLM™ symbolizes a leap forward in the application of AI within the workplace. It harnesses the power of advanced NLP techniques and extensive training data to provide businesses with an intelligent, adaptable, and effective tool for various use cases.

WorkLM™ Specifications

GPT-J Backbone

The GPT-J backbone provides WorkLM™ with an advanced predictive text generation capability, producing human-like responses in given contexts.

7B Parameters

Parameters are the numerical weights a machine learning model adjusts during training, and their count is a rough measure of the model’s capacity to learn from data. With a substantial ‘brain capacity’ of 7 billion parameters, WorkLM™ is designed to understand and produce intricate text.
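To make the parameter count more concrete, the short sketch below estimates how much memory is needed just to store the weights of a 7-billion-parameter model at a few common numeric precisions. It is a back-of-the-envelope illustration only: the function name and the list of precisions are assumptions made for this example, and the figures say nothing about how WorkLM™ is actually stored or served.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# 7 billion parameters, as stated above; the precisions are illustrative.
params = 7_000_000_000
for name, size in {"float32": 4, "float16": 2, "int8": 1}.items():
    print(f"{name}: {weight_memory_gb(params, size):.0f} GB")
```

Real deployments need additional memory for activations and other runtime state, so these numbers are a lower bound rather than a sizing guide.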

Proprietary Data

The power of WorkLM™ resides in its unique training dataset. This dataset, composed of 2TB of proprietary data, has been carefully collected and curated by Leena AI over the past seven years.

WorkLM™ Performance

Comparison with OpenAI GPT-3.5 Turbo

When considering enterprise tasks like automating helpdesk tickets, answering employee questions, creating integrations, publishing business intelligence, and automating tasks, WorkLM™ outperforms OpenAI GPT-3.5 Turbo significantly.

Security and Data Privacy

Leena AI is staunch in its commitment to maintaining the highest standards of security and data privacy. WorkLM™ is developed using data that Leena AI has collected from enterprises over the past seven years.

Applications of WorkLM™

WorkLM™ is designed to serve as a potent tool to automate and optimize every enterprise initiative, across all departments.

WorkLM™ Benefits for Enterprises

Amplified Productivity

WorkLM™ empowers employees to automate tasks, generate personalized content, and streamline workflows, resulting in substantial time savings and heightened productivity levels.

Actionable Insights

Harness the power of WorkLM™ to analyze extensive enterprise data, extract valuable insights, and make data-driven decisions that drive business growth and innovation.

Enhanced Employee Experience

Leverage WorkLM™’s intelligent capabilities to deliver a personalized and efficient employee experience, improving satisfaction and fostering stronger employee-enterprise relationships.

WorkLM™ Applications

Intelligent Virtual Assistant

Handling Complex Commands Across Multiple Systems

Identifying Knowledge Gaps & Creating Knowledge

Intelligent Robotic Process Automation (RPA)

Business Intelligence from Enterprise Data

Helpdesk Intelligence

Text Analysis

Email Auto-completion

Document Generation

Human-like Autonomous Agents

Frequently Asked Questions

Define large language model.

A large language model refers to an advanced artificial intelligence system that has been trained on extensive amounts of text data to understand and generate human-like language. It can comprehend complex language patterns and contexts, enabling it to produce coherent and contextually relevant responses.

What are the different types of large language models?

Most large language models today are built on the transformer architecture and differ mainly in which parts of it they use. Decoder-only (autoregressive) models, such as GPT-3, generate text one token at a time and have gained significant attention for their ability to process and generate natural language. Encoder-only models like BERT are effective for language understanding tasks. Encoder-decoder models like T5 treat every task as text-to-text, enabling versatile text manipulation. Hybrid models aim to leverage the benefits of multiple approaches. These different types of models offer diverse capabilities for understanding, generating, and manipulating language data.

What are the top 5 large language models?

Five of the most widely cited large language models are GPT-3 (Generative Pre-trained Transformer 3), T5 (Text-To-Text Transfer Transformer), BERT (Bidirectional Encoder Representations from Transformers), GPT-2, and XLNet. These models have made significant advancements in natural language understanding and generation.

What is the largest language model?

GPT-3, with 175 billion parameters, is one of the largest language models whose size has been publicly documented. However, it’s worth noting that the field is evolving rapidly: models with larger parameter counts, such as PaLM at 540 billion, have already been announced, and more are likely to follow.

Why are large language models important?

Large language models are important as they enable advancements in natural language understanding and generation. They have numerous applications, including machine translation, chatbots, content creation, and information retrieval. These models possess the potential to enhance human-computer interaction and drive innovation in various industries.

What are the components of a large language model?

A large language model is typically built on a neural network architecture, most commonly the transformer, which uses attention mechanisms to weigh how relevant each part of the input text is to every other part. It is produced through pre-training and fine-tuning, during which it learns from massive amounts of data to acquire language understanding and generation capabilities.
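The attention mechanism mentioned above can be illustrated with a minimal, dependency-free sketch of scaled dot-product attention. This is a simplified teaching example under stated assumptions: the function names and the tiny 2-dimensional "embeddings" are made up for illustration, and production models add learned projection matrices, multiple heads, and masking on top of this core step.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

# Toy 2-dimensional embeddings for three tokens (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
print(result)
```

Each row of `result` is a blend of all three token vectors, weighted by how strongly that token "attends" to the others.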

How do you make a large language model?

Creating a large language model involves training a model on a vast corpus of text data using deep learning techniques. This process includes pre-training the model on a language modeling task to learn language patterns and then fine-tuning it on specific downstream tasks. The training process requires significant computational resources and data preparation.

Is ChatGPT a large language model?

Yes, ChatGPT is a large language model developed by OpenAI. It belongs to the GPT family and is designed to generate human-like responses to user prompts. ChatGPT has been trained on a diverse range of internet text and has the ability to engage in conversations, answer questions, and provide useful information.

What are large language models used for?

Large language models find applications in various domains such as customer support, content creation, virtual assistants, language translation, and information retrieval. They can assist in automating tasks, enhancing user experiences, and improving productivity in both personal and business settings.

How do large language models work?

Large language models utilize deep learning techniques and complex neural network architectures to process and understand language data. They learn patterns, contexts, and semantic relationships from the training data to generate coherent and contextually appropriate responses. The models leverage vast amounts of text data to develop language understanding and generation capabilities.

How are large language models trained?

Large language models are trained through a two-step process: pre-training and fine-tuning. Pre-training involves training the model on a large corpus of text data, where it learns to predict the next word in a sentence. Fine-tuning is performed on specific tasks using task-specific datasets to align the model’s behavior with the desired output. The training process requires substantial computational resources and extensive data preparation.
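The idea of learning to predict the next word can be shown with a deliberately tiny stand-in: a bigram model that simply counts which word follows which. This is not how an LLM is implemented (LLMs use neural networks trained on far larger corpora), and the function names and three-sentence corpus are invented for this sketch, but the training objective it illustrates is the same in spirit.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follow_counts[current][nxt] += 1
    return follow_counts

def predict_next(follow_counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = follow_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# A made-up miniature "training corpus".
corpus = [
    "the model reads the text",
    "the model predicts the next word",
    "the model learns patterns",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "model"
```

Fine-tuning, in this analogy, would correspond to continuing the counting on a smaller, task-specific set of sentences so that the predictions shift toward that domain.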

What is generative AI vs large language models?

Generative AI is the broader concept, while large language models are one kind of generative AI focused on text. Generative AI refers to a branch of artificial intelligence that creates new content, such as text, images, or music, rather than only classifying or analyzing existing content. Large language models are generative AI models designed to process and generate human-like text. They learn patterns, grammar, and context from vast amounts of existing text data, allowing them to generate coherent and contextually relevant sentences or paragraphs.

When to use custom large language models?

Custom large language models can be used in specific scenarios where there is a need for domain-specific or industry-specific language understanding. These models are trained on specialized data that aligns with the specific requirements of the application or organization. Custom models can capture nuances, jargon, and context specific to a particular domain, providing more accurate and tailored outputs.